Consistent Characters¶

BBox-SAM2¶

[Q]

Good morning, Emily. I'm ready to tackle that bbox/SAM face swap workflow. In this picture I have 2 characters: one standing, and one sitting. Standing is Celeste, sitting is Charlie. I hope to be able to assign faces to them, using image to image... it can be done in separate instances (not all at once). Doable?

[Emily]

Yes — absolutely doable, and doing it in two separate passes is the right way for this image.

You’ve basically got two problems to solve cleanly:

- Isolate only the correct person’s face/head (standing Celeste vs sitting Charlie)

- Apply an ID/face swap only inside that mask, while the rest of the frame remains frozen

A bbox→SAM pipeline is ideal here because the scene is busy and you don’t want a global face detector to “helpfully” touch both people.

Below is a practical ComfyUI approach that works whether you’re doing classic SDXL inpaint, Flux/“edit” style, or any other img2img method that respects a mask.

The core plan (two passes)¶

1) Swap Celeste (standing)

- Detect bboxes (you should get 2 persons, or 2 faces)

- Pick the bbox that corresponds to the standing figure (upper-left / behind)

- Use that bbox as the prompt box for SAM → get a clean mask

- Inpaint / face-swap only inside that mask

- Save the result

2) Swap Charlie (sitting)

- Feed Pass 1’s output back in as the new input image

- Repeat bbox → select the sitting figure (right/front)

- SAM mask → inpaint / face-swap

- Done

This avoids cross-contamination where your “Celeste face” embedding accidentally leaks into Charlie’s face region (or vice versa).
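The two-pass plan above can be sketched as a small driver loop. This is only an illustration of the sequencing (each pass consumes the previous pass's output); `swap_face`, `PASSES`, and the file name are hypothetical stand-ins, not ComfyUI calls.

```python
from typing import Callable, Dict, List

# Hypothetical per-pass settings; names and values are illustrative only.
PASSES: List[Dict[str, str]] = [
    {"target": "standing", "identity": "Celeste"},
    {"target": "sitting", "identity": "Charlie"},
]


def run_two_passes(image: str, swap_face: Callable[[str, str, str], str]) -> str:
    """Run the swaps one after another, feeding each output into the next pass."""
    current = image
    for p in PASSES:
        current = swap_face(current, p["target"], p["identity"])
    return current


def fake_swap(image: str, target: str, identity: str) -> str:
    """Stand-in for a real masked face swap; it only records what was done."""
    return f"{image}+{identity}@{target}"


result = run_two_passes("scene.png", fake_swap)
```

The point of the loop is that Pass 2 never sees the untouched original, so Celeste's edit is already baked in when Charlie's mask is applied.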

Masking setup (bbox → SAM)¶

1) Detection node options

Pick one detector strategy:

Option A (usually easiest): Person detector

- Pros: reliable when faces are partly angled, hair covers cheeks, etc.

- Cons: bbox is larger, so SAM may segment more than you want (shoulders).

Use:

- Ultralytics Detector Provider (BBOX) → model: yolov8 person (or similar)

- Output: list of bboxes (should be 2 here)

Option B (more precise): Face detector

- Pros: bbox is tight on the head/face; SAM segmentation is cleaner

- Cons: profile/angle can sometimes miss (but your sitting person is very detectable).

Use:

- Ultralytics / face bbox model (whatever you’ve got installed that’s stable)

In this image: I’d start with face bboxes if available; otherwise person bboxes are fine.

2) Selecting the correct bbox

You’ll get two boxes. You need a deterministic way to select them.

Common approaches in ComfyUI graphs:

- BBox Selector (choose by index)

- Sort bboxes by X position (leftmost vs rightmost)

- Sort by Y position (topmost vs bottommost)

- Preview bboxes and pick index manually

For this specific frame:

- Celeste (standing) = more left and more top

- Charlie (sitting) = more right and more center/bottom

So you can do something like:

- Sort by X center:

  - leftmost bbox → Celeste

  - rightmost bbox → Charlie

That tends to be stable.
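A minimal sketch of the "sort by X center" selection, assuming each bbox is an `(x1, y1, x2, y2)` tuple in pixels. That layout and the sample numbers are assumptions for illustration; your detector node may use a different structure.

```python
from typing import List, Tuple

# Assumed bbox layout: (x1, y1, x2, y2) in pixels.
BBox = Tuple[float, float, float, float]


def x_center(box: BBox) -> float:
    """Horizontal midpoint of a bbox."""
    return (box[0] + box[2]) / 2.0


def sort_left_to_right(bboxes: List[BBox]) -> List[BBox]:
    """Order detections by horizontal position, regardless of detector order."""
    return sorted(bboxes, key=x_center)


# Made-up detections, deliberately listed right-to-left.
detections: List[BBox] = [
    (900.0, 420.0, 1100.0, 640.0),  # right/front figure
    (380.0, 90.0, 520.0, 260.0),    # left/top figure
]
ordered = sort_left_to_right(detections)
celeste_box, charlie_box = ordered[0], ordered[-1]
```

Sorting by position rather than trusting the detector's index makes the selection independent of whatever order the model happens to return.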

3) Feeding bbox to SAM

Use a SAM box-prompt workflow:

- SAM Model Loader

- SAM Predictor (Box Prompt) (naming varies by node pack)

  - Inputs: image + bbox

  - Output: mask

Then add mask refinement:

- Dilate/Erode (tiny amounts)

  - For face swap: dilate slightly so you include edges cleanly

- Feather / Blur mask edge (important)

  - This is what stops the “sticker face” seam

Rule of thumb for face/head work:

- Dilate: small

- Feather: moderate

You want the mask to include a bit of hairline/cheek edge, but not the blazer or background.
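To make "dilate, then feather" concrete, here is a tiny pure-Python sketch on a list-of-lists mask. It is not how any ComfyUI node is implemented; the square-neighbourhood dilate and the box-blur feather are simple stand-ins chosen for readability.

```python
from typing import List

Mask = List[List[float]]  # rows of values in [0, 1]


def _window(mask: Mask, x: int, y: int, radius: int) -> List[float]:
    """Values in the square neighbourhood around (x, y), clipped at the borders."""
    h, w = len(mask), len(mask[0])
    return [
        mask[j][i]
        for j in range(max(0, y - radius), min(h, y + radius + 1))
        for i in range(max(0, x - radius), min(w, x + radius + 1))
    ]


def dilate(mask: Mask, radius: int) -> Mask:
    """Grow the mask: each pixel takes the maximum of its neighbourhood."""
    return [[max(_window(mask, x, y, radius)) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def feather(mask: Mask, radius: int) -> Mask:
    """Soften edges: each pixel takes the mean of its neighbourhood (box blur)."""
    out = []
    for y in range(len(mask)):
        row = []
        for x in range(len(mask[0])):
            vals = _window(mask, x, y, radius)
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


# 7x7 mask with a single "face" pixel in the centre.
hard = [[1.0 if (x, y) == (3, 3) else 0.0 for x in range(7)] for y in range(7)]
grown = dilate(hard, 1)   # becomes a 3x3 block of ones
soft = feather(grown, 1)  # same block, now with a graded edge
```

After both steps the centre stays fully selected while the border pixels take intermediate values, which is what lets the sampler blend the edit into the surrounding image.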

Apply the face swap (methods inside the mask)¶

There are two broad ways people do this in ComfyUI:

1) Inpaint with an identity condition (most controllable)

You use your preferred identity driver:

- InstantID / FaceID / IP-Adapter FaceID / InsightFace embedding

…and run an inpaint KSampler with the SAM mask.

Why this is great: the mask clamps the effect.

Suggested settings (starting points):

- Denoise: 0.30–0.55

  - Lower = preserves lighting/structure, less chance of “new person”

- Steps: 12–24 (depending on model; ZiT can go lower)

- CFG:

  - SDXL-style: moderate

  - ZiT-style: you already know the drill (often ~1.6–2.2 behaves)

Prompt discipline matters (keep it boring):

- Positive: “same scene, same lighting, same pose, natural skin texture, realistic”

- Add one instruction: “replace only the face of the standing woman” (or sitting girl)

- Negative: “extra face, double face, deformed eyes, age change, makeup change, smile”

If you’re using a strong identity injector, you often need less prompt, not more.

2) A dedicated face-swap node, then composite with the SAM mask

Some workflows do:

- face swap tool runs on whole image (or face crop)

- then composite output back into the original using the SAM mask

This can work well if your swapper is very good, but it’s more fiddly with blending and color matching.

If you tell me which swapper you’re using (ReActor? InsightFace swap? something else?), I can steer you to the cleanest variant.

Practical “gotchas” for this image (so you don’t waste hours)¶

1) The sitting face is a side profile

Side profiles can cause:

- eye/eyelash distortions

- nose bridge “rebuilds”

- mouth corner weirdness

Fix: keep denoise lower (0.30–0.40) on Charlie’s pass, and make the mask a touch tighter to the face (less cheek/ear area).

2) Hairline continuity is everything

If your mask cuts across the hairline sharply, you’ll get the “helmet seam.”

Fix:

- Expand mask slightly into hairline

- Feather edge more than you think you need

- Don’t let the model “invent” new hair — keep denoise moderate

3) Don’t let the standing person’s blazer get included

If SAM grabs blazer/neckline, you’ll suddenly get clothing drift.

Fix:

- After SAM, do a quick mask intersection with a tighter ellipse/circle around the face/head, or erode downward.
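The "intersect with a tighter ellipse" fix can be sketched like this. The masks are plain lists of floats and the ellipse centre and radii are made-up values; in a real graph you would place the ellipse over the head.

```python
from typing import List

Mask = List[List[float]]  # rows of values in [0, 1]


def ellipse_mask(w: int, h: int, cx: float, cy: float, rx: float, ry: float) -> Mask:
    """1.0 inside the ellipse centred at (cx, cy) with radii (rx, ry), else 0.0."""
    return [
        [1.0 if ((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1.0 else 0.0
         for x in range(w)]
        for y in range(h)
    ]


def intersect(a: Mask, b: Mask) -> Mask:
    """Pixel-wise minimum keeps only the area that both masks cover."""
    return [[min(p, q) for p, q in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


# Pretend SAM selected the whole left half of an 8x8 image (face plus blazer).
sam = [[1.0 if x < 4 else 0.0 for x in range(8)] for y in range(8)]
head = ellipse_mask(8, 8, cx=2.0, cy=2.0, rx=2.0, ry=2.0)
tight = intersect(sam, head)
```

Anything SAM grabbed below the ellipse (the blazer region in this toy example) drops out, while the head area survives unchanged.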

What I’d build as a clean node stack (conceptual)¶

For each pass:

- Load Image (original for Pass 1; Pass 1 output for Pass 2)

- Ultralytics BBOX detect (person or face)

- BBox sort/select (left/top = Celeste; right = Charlie)

- SAM box-prompt → mask

- Mask refine (dilate + feather)

- Identity condition (your face reference image → embedding/InstantID/etc.)

- Inpaint KSampler (masked)

- Save Image

Quick sanity check on “separate instances”¶

Doing them separately is not just doable — it’s best practice:

- You keep each identity injection “pure”

- You only debug one mask at a time

- You can tune denoise differently for each face (you’ll want that here)

If you tell me which exact nodes you’re using for identity (InstantID? IPAdapter FaceID? ReActor? something Flux-specific like Kontext/Qwen edit?), I’ll translate the above into a literal node-by-node recipe with the best parameter starting points for that stack — and a reliable bbox selection trick so Celeste/Charlie never get swapped accidentally.

SRPO-FaceOnly¶

[Q]

I'll do better... I'll send you a workflow that works well for even side view face swaps. I just need it modifying to include the missing nodes for bbox/SAM and how they are connected.

[Emily]

Perfect — I’ve opened your workflow and it’s exactly the kind of graph that’s easy to “surgically” upgrade with bbox→SAM, because you already have the cleanest place to inject a mask: right before the sampler.

Here’s the key observation from your JSON:

- Inside the SRPO-FaceOnly subgraph, you do:

  - ImageScaleToTotalPixels (230) → VAEEncode (227) → SamplerCustomAdvanced (228)

- That means this is classic img2img in latent space, and the correct “face-only” way to clamp edits is:

✅ Use a mask-driven latent noise mask (so denoise only happens in the face region)

So we add bbox → SAM → mask refine → SetLatentNoiseMask, then feed that masked latent into your existing sampler.

I’ll describe it in two layers:

- Where to insert it in your exact graph (by node ID)

- What nodes to add and how to connect them (so you can drop in the versions you already have installed)


1) Where this goes in your existing SRPO-FaceOnly subgraph

Current chain (important part)

Inside SRPO-FaceOnly (the subgraph embedded in node 234 at top level):

- 230 ImageScaleToTotalPixels → outputs IMAGE

- 227 VAEEncode takes that IMAGE → outputs LATENT

- 228 SamplerCustomAdvanced takes that LATENT as latent_image

We will change exactly one link

Right now you have:

- 227 (LATENT) → 228 (latent_image)

We will replace it with:

- 227 (LATENT) → NEW: SetLatentNoiseMask → 228 (latent_image)

Everything else in your sampler stack stays untouched.

2) The bbox/SAM block you add (and how it connects)

The “golden rule” for alignment

Your detector + SAM must see the same image that gets encoded into latent.

In your workflow, that’s the output of:

✅ 230 ImageScaleToTotalPixels

So we branch 230’s IMAGE output into the bbox/SAM chain.
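The same alignment rule applies to any box or point that was measured on the original image rather than on the scaled one: it has to be rescaled first. The sketch below assumes the scaling node keeps the aspect ratio and targets `megapixels × 1024 × 1024` total pixels, which is my understanding of ImageScaleToTotalPixels; check the actual output size in your graph before relying on it. The function names are hypothetical.

```python
import math
from typing import Tuple


def scaled_size(width: int, height: int, megapixels: float) -> Tuple[int, int]:
    """Size after scaling to a total pixel budget while keeping the aspect ratio."""
    total = megapixels * 1024 * 1024
    scale = math.sqrt(total / (width * height))
    return round(width * scale), round(height * scale)


def map_point(x: float, y: float,
              src: Tuple[int, int], dst: Tuple[int, int]) -> Tuple[int, int]:
    """Map a pixel coordinate from the source resolution to the scaled resolution."""
    return round(x * dst[0] / src[0]), round(y * dst[1] / src[1])


src = (2048, 2048)                      # original image size (example value)
dst = scaled_size(*src, megapixels=1.0)
point = map_point(1000, 500, src, dst)  # a point measured on the original
```

If the detector and SAM both read the scaled image directly, as recommended above, no conversion is needed; this only matters when coordinates come from outside the graph.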

3) Node-by-node wiring (the exact connections)

I’m going to name the nodes generically because node pack naming differs, but the sockets are consistent.

A) BBOX detection (person or face)

Input:

- IMAGE = output of 230 ImageScaleToTotalPixels

Output:

- some form of BBOXES / DETECTIONS

Typical nodes you might already have (based on your earlier mentions):

- Ultralytics Detector Provider (BBOX) (loads model)

- Ultralytics Detector (BBOX) (runs inference)

Connections:

- 230:IMAGE → BBOX Detector:image

If you have both person and face models available:

- Use face bbox for tighter SAM masks

- Use person bbox if face detection ever misses (profiles sometimes do)

B) Select which bbox (standing Celeste vs sitting Charlie)

Add a selector node that lets you choose a specific detection:

- BBox Select / Choose Detection by Index

Add a simple PrimitiveInt called something like bbox_index.

Connections:

- BBOX Detector:bboxes → BBox Select:bboxes

- PrimitiveInt(bbox_index) → BBox Select:index

How you’ll use it:

- Run once and see which index corresponds to which person.

- In this scene, very often:

  - index 0 = left/top (standing Celeste)

  - index 1 = right/front (sitting Charlie)

…but that depends on the detector’s ordering, so treat it as “discover once, then lock it”.

C) SAM: box-prompt segmentation → face/head mask

You want the SAM node variant that supports box prompt (not points).

Nodes commonly look like:

- SAM Model Loader

- SAM Predictor (Box Prompt) or Segment Anything (Box)

Connections:

- 230:IMAGE → SAM:image

- BBox Select:bbox → SAM:box (or bbox, rect, etc.)

- SAM Loader:model → SAM:model

Output you want:

- MASK (best) or IMAGE mask (common) → then convert to MASK

If SAM outputs an image, add:

- Image to Mask / Mask from Image

and connect:

- SAM:mask_image → ImageToMask:image → outputs MASK

D) Mask refinement (this is what makes it look real)

Add, in this order:

- Dilate/Erode (or “Grow/Shrink Mask”)

  - Slight dilate helps include hairline/cheek edge

- Feather / Blur Mask

  - This prevents the “sticker seam”

Connections:

- SAM:MASK → DilateErode:mask

- DilateErode:mask → MaskBlur/Feather:mask

Optional but very useful:

- Invert Mask (only if your mask is backwards)

- Mask Clamp/Threshold (only if SAM output is “gray and mushy”)

E) Convert refined face mask into a latent noise mask

Now the crucial “ComfyUI-native” trick:

Use:

- SetLatentNoiseMask

Connections:

- 227:LATENT → SetLatentNoiseMask:samples

- RefinedMask:MASK → SetLatentNoiseMask:mask

- SetLatentNoiseMask:LATENT → 228:latent_image

That’s it. Your sampler will now denoise only inside the mask, using the same denoise value you already drive into the scheduler.
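The one-link rewire can also be sketched against ComfyUI's API-format prompt (the dict exported via "Save (API Format)"), where each linked input is written as `[source_node_id, output_index]`. Node IDs 227, 228 and 230 come from the conversation; IDs "900" and "901" and the `HypotheticalRefinedMask` class are placeholders, and the input lists are trimmed to the relevant sockets. The core SetLatentNoiseMask node names its latent input `samples`, as far as I know.

```python
import copy
from typing import Any, Dict

Prompt = Dict[str, Dict[str, Any]]


def insert_noise_mask(prompt: Prompt, encoder_id: str, sampler_id: str,
                      mask_id: str, new_id: str) -> Prompt:
    """Return a copy of `prompt` with SetLatentNoiseMask between encoder and sampler."""
    patched = copy.deepcopy(prompt)
    patched[new_id] = {
        "class_type": "SetLatentNoiseMask",
        "inputs": {"samples": [encoder_id, 0], "mask": [mask_id, 0]},
    }
    # Re-point the sampler at the masked latent instead of the raw encode.
    patched[sampler_id]["inputs"]["latent_image"] = [new_id, 0]
    return patched


# Trimmed, illustrative prompt: only the sockets discussed above are shown.
prompt: Prompt = {
    "227": {"class_type": "VAEEncode", "inputs": {"pixels": ["230", 0]}},
    "228": {"class_type": "SamplerCustomAdvanced",
            "inputs": {"latent_image": ["227", 0]}},
    "900": {"class_type": "HypotheticalRefinedMask", "inputs": {}},
}
patched = insert_noise_mask(prompt, "227", "228", mask_id="900", new_id="901")
```

Working on a deep copy keeps the original prompt intact, so the unmasked version stays available for comparison.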

4) Exactly what to do for your two-person shot (two passes)

Pass 1: Celeste (standing)

- Set bbox_index to whichever box is the standing woman

- Run

- Save

Pass 2: Charlie (sitting)

- Use the Pass 1 output as the new input image

- Change bbox_index to the other person

- Run

- Save

This is the cleanest way to prevent identity bleed between the two faces.

5) Two small parameter notes (specific to your SRPO setup)

Your denoise is currently 0.15

That’s great for subtle edits, but face swaps sometimes need a little more freedom inside the face region.

Because you’re masking now, you can safely try:

- 0.22–0.35 for Charlie (profile face usually needs more)

- 0.18–0.28 for Celeste (front-ish tends to behave)

Mask edge quality matters more than steps

With a good feathered mask, you’ll get away with lower steps and keep lighting consistent.

6) If you want, I can produce a patched JSON — but I need one thing from your node list

I can generate a ready-to-import .json that adds the nodes and rewires 227 → SetLatentNoiseMask → 228, but ComfyUI graphs are picky: the node type strings must match your installed node pack names exactly.

So: tell me which SAM + bbox node pack you’re using (just the node names as they appear in your right-click menu), e.g.:

- “Ultralytics Detector Provider (BBOX)” exact name ✔

- The exact SAM nodes you use (e.g. “SAMLoader”, “SAMAutoMask”, “ImpactSAM”, etc.)

Once I’ve got those exact node names, I can write you a patched workflow file that drops straight in.

For now, the wiring above is 1:1 compatible with your workflow structure and uses the correct insertion point that your graph already sets up.

1st Patch¶

[Q]

I'm already lost at 3). Actually, if you send me a .json with what YOU would normally recommend and if I don't actually have a particular node (set) installed, ComfyUI will do it for me. Would that work for you?

[Emily]

Yep — I can send you a patched .json right now. One important correction first though:

ComfyUI will not auto-install missing custom nodes. What it will do is import the workflow and show the missing nodes in red, so you can then install the required node packs and everything will snap into place.

That said: I’ve made you a patched version of your workflow that adds a bbox → SAM → refined mask → SetLatentNoiseMask block inside your SRPO-FaceOnly subgraph, and rewires the sampler so it only denoises inside the SAM mask.

✅ Download the patched workflow¶

Download ZZ_SRPO-FaceOnly_BBOX-SAM_PATCHED.json

What I changed (in plain English)¶

Inside the SRPO-FaceOnly subgraph:

- Your old link was: VAEEncode (227) → SamplerCustomAdvanced (228)

- I replaced it with: VAEEncode (227) → SetLatentNoiseMask → SamplerCustomAdvanced (228)

And I added a new branch off the same scaled image (ImageScaleToTotalPixels (230)) that does:

Ultralytics BBOX detect → select bbox by index → SAM box segment → GrowMask → MaskBlur → SetLatentNoiseMask

So you’ll be able to:

- set BBOX Index = 0 (say Celeste), run once

- set BBOX Index = 1 (Charlie), run again on the output image

What you’ll likely need installed (if you see red nodes)¶

Because bbox + SAM are not core ComfyUI nodes, you’ll probably need:

- Impact Pack (commonly provides Ultralytics BBOX + SAM box prompt nodes), and/or

- A SAM node pack (Segment Anything nodes)

If, after importing, you see missing node types like:

- UltralyticsDetectorProvider (BBOX)

- UltralyticsDetector (BBOX)

- BBOX Select

- SAMLoader

- SAM Segment (Box)

…that just means your installed pack uses slightly different node names. In that case you can replace them with your equivalents (the wiring stays the same).

How to use it (two-pass, clean and safe)¶

- Load your original image as usual.

- In the SRPO-FaceOnly subgraph, find “BBOX Index”:

  - try 0, run, check who got masked

  - if it’s the wrong person, change to 1

- When Celeste is correct, save output.

- Feed that output back in, flip BBOX Index to the other value, run again.

That’s the most reliable way to keep Celeste/Charlie identities from bleeding into each other.

If you import it and tell me which nodes show as missing, I’ll immediately produce a second patched JSON that uses the exact node type names from your installed packs (so it loads perfectly with zero red nodes).

Patch 2¶

[Q]

Okay, so what I've done is un-subgraph the whole workflow. I have replaced nodes with their current iterations. The only node I cannot find is the BBOX Select (or 'Select BBOX). It simply doesn't seem to exist anywhere. I've attached the workflow for your study.

[Emily]

You’re not going mad — in the workflow you attached, the “BBOX Select” problem is actually two things at once:

- You don’t have a bbox detector in this graph anymore, so there’s no “BBOXES list” to select from.

- The “Select BBOX” node that is present in the JSON is currently wired incorrectly (it’s literally receiving an IMAGE where it expects BBOXES). That guarantees it can’t work even if the node exists.

So you’ve ended up at: “I need a node that doesn’t exist” — but the deeper truth is: there’s nothing to select yet.

The cleanest fix (and honestly the least confusing)¶

Since you already have SAM2 installed and working, you can skip bbox selection entirely and drive SAM2 with a single positive point (“click here = segment this object”). This is much easier, and for two people it’s perfect because you’re doing two passes anyway.

I patched your workflow into a version that removes BBOX Select completely and runs SAM2 in point-prompt mode:

✅ Download: SRPO-SAM2-PointPrompt-NoBBoxSelect.json

This file is based on your attached workflow.

How to use the point-prompt version¶

- Load the workflow.

- Find Sam2Segmentation.

- Leave the bboxes input disconnected (it is, in the patched file).

- In coordinates_positive, type a single point:

  - Format: x,y (pixel coordinates)

  - Pass A (Celeste standing): pick a point on her face (forehead/cheek)

  - Pass B (Charlie sitting): pick a point on her cheek/forehead

- Optional but helpful: put a point on the other person into coordinates_negative to stop SAM from drifting.

Everything else in your mask chain stays exactly as you already built it: SAM2 mask → GrowMask → FeatherMask → SetLatentNoiseMask → Sampler

“But how do I get x,y?”¶

Any image viewer that shows pixel coordinates is fine. Two easy options:

- Open the image in an editor (Preview, GIMP, Photoshop, Krita) and read the cursor coordinates.

- Or just estimate once, run, and nudge the numbers until the mask locks onto the face (SAM2 is forgiving).

If you still want bbox automation later¶

Then you’ll need a bbox detector node that outputs a BBOXES list (Ultralytics/Impact Pack etc.), and then you can select by index.

But given you said you’re already lost at step 3 earlier: point-prompt SAM2 is the “get it working today” path, and it’s robust for side-profile faces.

If you load the patched workflow and tell me:

- “I used x,y = ___ and SAM grabbed the wrong person / too much hair / shoulders”

…I’ll tell you exactly what to change (grow amount, feather, and whether to add a negative point) to make it snap tight to just the head/face.


[Q]

Actually, I'm getting the error "Cannot read properties of undefined (reading 'output')" when I try to run this workflow. No node is highlighted or outlined in green. Terminal isn't showing me anything. I'm baffled.

[Emily]

That error is almost always a broken (dangling) link ID inside the workflow JSON — i.e. an input says “I’m connected to link #470” but link #470 doesn’t exist anymore, so the UI hits undefined.output and throws:

Cannot read properties of undefined (reading 'output')

In the workflow you attached, that’s exactly what’s happening: several nodes have inputs pointing to link IDs that don’t exist (a common side-effect of un-subgraphing + replacing nodes). So ComfyUI can’t even start execution, and it won’t necessarily highlight anything.
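A dangling link of this kind can be found with a few lines of Python before loading the file. The sketch assumes the usual UI-format workflow layout, where top-level `"links"` is a list of arrays starting with the link ID and each node's `"inputs"` entries carry a `"link"` field; exports that use subgraphs or a newer schema may be laid out differently. The sample workflow is invented.

```python
from typing import Any, Dict, List, Tuple


def find_dangling_links(workflow: Dict[str, Any]) -> List[Tuple[int, str, int]]:
    """Return (node_id, input_name, link_id) for inputs that reference undefined links."""
    # Assumed link layout: [link_id, from_node, from_slot, to_node, to_slot, type]
    defined = {link[0] for link in workflow.get("links", [])}
    dangling = []
    for node in workflow.get("nodes", []):
        for inp in node.get("inputs", []):
            link_id = inp.get("link")
            if link_id is not None and link_id not in defined:
                dangling.append((node["id"], inp["name"], link_id))
    return dangling


# Invented example: input "noise" points at link 470, which is never defined.
workflow = {
    "links": [[469, 227, 0, 228, 4, "LATENT"]],
    "nodes": [
        {"id": 228, "inputs": [
            {"name": "latent_image", "link": 469},
            {"name": "noise", "link": 470},
        ]},
    ],
}
problems = find_dangling_links(workflow)
```

For a real file, load it with `json.load` and pass the resulting dict to `find_dangling_links`; each reported tuple names the node and input that need reconnecting.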

I fixed your workflow JSON¶

I patched your uploaded workflow to remove all dangling link references and re-wire the missing essentials:

- RandomNoise → SamplerCustomAdvanced (noise)

- Lora/Model → BasicGuider (model)

- Text conditioning → BasicGuider (conditioning)

- BasicGuider → SamplerCustomAdvanced (guider)

…and I also cleared the dead links on the “BBOX Select” node so it won’t crash even if it’s still sitting there unused.

✅ Download the fixed workflow here: SRPO-BBOX-SAM_PATCHED-1_FIXED.json

What to do next (so you can actually mask one face)¶

Since you couldn’t find a working BBOX Select anyway, just use SAM2 point prompting for now (it’s honestly the fastest/cleanest for two people):

- Open the fixed workflow.

- Go to Sam2Segmentation.

- Set coordinates_positive to a point on the face you want (Celeste or Charlie).

  - Format is typically x,y in pixels.

- (Optional) Put a point on the other person in coordinates_negative.

- Run.

- Flip the coordinates to the other face for pass 2.

If you tell me what the Sam2Segmentation node in your install expects for coordinate formatting (some use "x,y" strings, some use lists), I’ll give you the exact literal input format and a couple of “known good” example values.

If you load the fixed JSON and it still errors, paste the browser console error line (DevTools → Console). But in this case, the dangling-link issue was concrete and should be resolved by that fixed file.

[Q]

Actually, I found it. As you said, it was missing connections. Thanks.

[Emily]

Brilliant — that lines up perfectly with that undefined.output error. Once a single link reference is dangling, ComfyUI can fall over before it can even “green-outline” a culprit.

Now that it runs, here’s the quickest way to get your two-person, two-pass face assignment stable (and avoid the classic “oops it drifted onto the other person”):

Pass workflow (repeat twice)¶

- Lock the target first

  - If you’re using SAM2 point prompt: put one positive point dead-centre on the target face (forehead/cheek), and one negative point on the other person’s face.

  - If you’re using bbox+SAM: set the bbox index to the right person, then keep it fixed.

- Mask tuning (the 3 knobs that matter)

  - GrowMask: enough to include hairline + jaw edge (small to moderate)

  - Feather: moderate (this hides seams)

  - If SAM ever grabs clothing/shoulder: reduce Grow or add a negative point lower down.

- Denoise discipline

  - Side view (Charlie): start lower (roughly 0.25–0.35 if your stack tolerates it), because profile geometry gets weird fast.

  - More front-ish (Celeste): can go a touch higher if needed.

- Save output, re-feed for pass 2, switch target, run again.

One “quality of life” suggestion¶

After you get a good SAM mask once, freeze it for that pass (don’t keep changing points/index). Do all identity tweaking with the mask held steady — it makes debugging way saner.

If you want, paste (or screenshot) just the mask result after you set Celeste’s target, and I’ll tell you whether to tweak Grow or Feather for the cleanest blend before you start burning time on identity tuning.

[Q]

Let's take this step by step.. on lock the target first, you said:

"If you’re using SAM2 point prompt: put one positive point dead-centre on the target face (forehead/cheek), and one negative point on the other person’s face."

How?

[Emily]

Right — “how” in ComfyUI depends on which SAM2 node you’re using, because different node packs accept points in different formats. But the mechanism is always the same:

- Positive point(s) = “segment this”

- Negative point(s) = “definitely not this”

So I’ll give you the step-by-step that works for the most common SAM2 node styles, and you can match it to what you see in your node.

1) Find your SAM2 node and open its inputs

In your graph, click the node called something like:

- Sam2Segmentation

- SAM2 Segment

- SegmentAnything2

- SAM2Predictor

Look at the widgets/fields for points. You’ll usually see one or more of these:

- coordinates_positive

- coordinates_negative

- positive_points

- negative_points

- points

- point_coords + point_labels

2) Enter a positive point on the target face

You want one point roughly on the cheekbone/forehead of the face you’re editing.

If your node has fields like coordinates_positive and coordinates_negative¶

Then it typically wants either:

Format A (most common):

- coordinates_positive: x,y

- coordinates_negative: x,y

Example:

- coordinates_positive: 720,380

- coordinates_negative: 1030,520

Format B (multiple points):

- coordinates_positive: x1,y1; x2,y2

- coordinates_negative: x1,y1; x2,y2

Example:

- coordinates_positive: 720,380; 735,395

- coordinates_negative: 1030,520

If your node has point_coords and point_labels¶

Then it usually wants:

- point_coords: a list of [x,y]

- point_labels: a list of 1 for positive and 0 for negative

Example:

- point_coords: [[720,380],[1030,520]]

- point_labels: [1,0]

(First point = positive on target face, second point = negative on the other person.)
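For the `point_coords` / `point_labels` style, the two lists can be built from separate positive and negative point lists so the labels never fall out of step with the coordinates. `build_prompts` is a hypothetical helper, not part of any node pack.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def build_prompts(positive: List[Point],
                  negative: List[Point]) -> Tuple[List[List[int]], List[int]]:
    """Concatenate positive then negative points; label them 1 and 0 respectively."""
    coords = [list(p) for p in positive] + [list(p) for p in negative]
    labels = [1] * len(positive) + [0] * len(negative)
    return coords, labels


# Same example values as in the text above.
point_coords, point_labels = build_prompts([(720, 380)], [(1030, 520)])
```

Because both lists are derived from the same inputs, adding or removing a point can never leave a coordinate without a label.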

3) Add a negative point on the other person’s face

Same deal: one click-point in the middle of the other face.

This is the “lock” part: it prevents SAM from deciding both faces belong to one mask.

4) Where do you get x,y coordinates?

You have three easy options:

Option 1: Your SAM2 node has a “click to add point” UI

Some builds let you click directly in a preview window or use an “interactive” helper node. If you see anything like:

- Interactive SAM

- Click points

- Preview & Click

Use that; it’s the nicest.

Option 2: Use any image editor that shows coordinates

Open the image in:

- GIMP / Krita / Photoshop

Hover over the face → read the status bar X,Y.

Option 3: Do a quick “guess and nudge”

If your image is, say, 1024×1024, start with rough positions:

- Standing face: around upper-left-ish

- Sitting face: right-ish

Run once. If the mask grabs the wrong person, move the point and rerun. SAM2 is forgiving — you only need to be in the right region.

5) Sanity check (you should see this result)

After running the SAM2 node, the produced mask should:

- cover only the target head/face

- exclude the other person entirely

If it covers both, add a second negative point (one on each cheek of the other face), or move the positive point slightly more central on the target face.

Fastest way to make this 100% concrete¶

Tell me the exact name of the SAM2 node you’re using and paste the list of its point-related fields (just the labels you see: e.g. “coordinates_positive / coordinates_negative” or “point_coords / point_labels”). Then I’ll give you the exact string to type for that node format.

[Q]

So, for input it wants a string. In my string for coords_pos, i've got 660,275; 750,450. for neg, i've got: 410,150; 500, 225, also as string. When I try to run this, Sam2Segmentation errors with:

all input arrays must have the same number of dimensions, but the array at index 0 has 2 dimensions and the array at index 1 has 0 dimensions. Format problem? Why is it accepting my first array and not the 2nd?

[Emily]

Yep — format problem, and the error message tells us exactly what kind:

- Your positive coords string is being parsed into a proper 2-D array (something like [[660,275],[750,450]]) → 2 dimensions

- Your negative coords string is being parsed into… nothing (empty / scalar / invalid) → effectively 0 dimensions

So SAM2 ends up with:

- pos_points = array of shape (N,2)

- neg_points = “empty” (or not parsed at all)

…and it crashes because it expects both inputs to resolve to arrays with the same dimensionality.

Why would negative fail to parse when positive works?¶

In what you typed, you have an extra space after the comma in the second pair:

500, 225

Some parsers are tolerant; some are brittle and only accept int,int with no spaces. Also, some expect exact separators and will treat the whole thing as invalid if one token doesn’t match.
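To illustrate what "tolerant" means here, the sketch below parses `x,y; x,y` strings while ignoring stray spaces and accepting `;`, `|` or newlines as separators. It is not the parser used by any SAM2 node, only an example of the behaviour a strict parser lacks.

```python
import re
from typing import List, Tuple


def parse_points(text: str) -> List[Tuple[int, int]]:
    """Parse 'x,y; x,y' style strings, tolerating spaces and ; | newline separators."""
    points = []
    for chunk in re.split(r"[;|\n]", text):
        chunk = chunk.strip()
        if not chunk:
            continue  # skip empty pieces such as a trailing separator
        x_str, y_str = chunk.split(",")
        points.append((int(x_str), int(y_str)))  # int() ignores surrounding spaces
    return points


neg = parse_points("410,150; 500, 225")
```

A strict parser that only matches `int,int` with no whitespace would reject the same string, which is why removing the spaces is the first thing to try.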

Fix 1 (most likely): remove spaces everywhere

Try exactly this:

- coords_pos: 660,275;750,450

- coords_neg: 410,150;500,225

No spaces, no trailing semicolons.

Run again.

Fix 2: match the exact delimiter it expects

Some SAM2 point-string parsers expect pipes or newlines, not semicolons.

Try these alternates if Fix 1 still errors:

Variant A (pipe)

- pos: 660,275|750,450

- neg: 410,150|500,225

Variant B (newline)

- pos: 660,275 and 750,450, one pair per line

- neg: 410,150 and 500,225, one pair per line

(Still as a string field; you can paste multi-line text.)

Fix 3: ensure “empty” is truly empty

A bunch of SAM2 nodes treat a blank negative field as valid, but a malformed one as invalid.

So if you just want to test:

- Set coords_neg to empty (delete everything)

- Leave pos with one point only: 660,275

If that runs, we’ve confirmed the parser is strict and the neg formatting is the only issue.

One more gotcha: positive/negative point counts sometimes must match¶

Some implementations (less common, but I’ve seen it) expect the same number of pos and neg points.

You currently have 2 pos and 2 neg, which is fine. But if you test with 1 pos and 0 neg and it fails, that’s a clue.

Quick best-practice for your use case (two people)¶

You don’t actually need 2 positive points most of the time.

Try:

- pos (target face): 660,275

- neg (other face): 410,150

That tends to “lock” faster and avoids SAM accidentally grabbing hair/clothing.

If you paste the exact node name (full title) and, if visible, any hint text under those fields (some packs show an example format), I can tell you the one correct delimiter so you don’t have to trial-and-error.

Space Errors?¶

[Q]

Removed the space. Exact same error.

[Emily]

Yep — that error is almost certainly a format mismatch for the point strings.

In the newer SAM2 tooling, those coordinates_positive / coordinates_negative fields are expected to be a JSON list of points, i.e. [[x,y],[x,y]], not the older “x,y; x,y” style. (That’s also consistent with the companion node Florence2toCoordinates, which explicitly says it outputs the center coordinates as a JSON string.) (Instasd)

When SAM2 can’t parse your negative string, it turns into an “empty / scalar-ish” array (0 dimensions), while the positive points become a proper 2D array — and NumPy throws exactly the error you’re seeing (“same number of dimensions… index 1 has 0 dimensions”).

What to type instead (works)¶

Start dead simple: one positive point, one negative point.

- coordinates_positive: [[660,275]]

- coordinates_negative: [[410,150]]

If you want two points each, do:

- coordinates_positive: [[660,275],[750,450]]

- coordinates_negative: [[410,150],[500,225]]

And if you don’t want any negative points, don’t leave it blank. Use an empty JSON list:

- coordinates_negative: []

That alone usually clears the crash.
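Generating these strings with `json.dumps` avoids hand-typing brackets. The sketch below produces the `[[x,y],...]` form described above; as an assumption worth checking against your own install, it also offers a list-of-objects form (`[{"x":..,"y":..}]`), since some SAM2 node packs and point-editor nodes appear to exchange points that way. `points_to_json` is a hypothetical helper.

```python
import json
from typing import List, Tuple


def points_to_json(points: List[Tuple[int, int]], as_objects: bool = False) -> str:
    """Serialise points as a compact JSON string in one of two layouts."""
    if as_objects:
        payload = [{"x": x, "y": y} for x, y in points]  # assumed alternative layout
    else:
        payload = [[x, y] for x, y in points]            # layout described in the text
    return json.dumps(payload, separators=(",", ":"))


positive = points_to_json([(660, 275), (750, 450)])
negative = points_to_json([])  # empty list rather than a blank field
object_style = points_to_json([(660, 275)], as_objects=True)
```

If the pair form still fails, comparing it with the string a point-editor node actually emits is a quick way to see which layout your node expects.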

“How do I place the points?” (without guessing coordinates)¶

You’ve basically got two options:

Option A — Click-to-place (recommended)¶

Use a Points Editor node (it’s mentioned as available for testing in KJNodes) so you can literally click on the face for a positive point and click the other face for a negative point. (Gitee)

Workflow idea:

- Feed your image into Points Editor

- Add:

  - one positive point on Celeste’s face (forehead/cheek)

  - one negative point on Charlie’s face

- Pipe its output string straight into Sam2Segmentation’s coordinate inputs

This avoids all manual coordinate formatting mistakes.

Option B — Manual coordinates (what you’re doing now)¶

If you’re manually typing them, switch to the JSON format above.

Quick sanity test (diagnose in 10 seconds)¶

To prove it’s the negative parsing:

- Set coordinates_positive to [[660,275]]

- Set coordinates_negative to []

- Run.

If that runs, then your issue was 100% formatting/parsing on the negative field — and switching to JSON lists fixes it.

If you want, paste a screenshot of your Sam2Segmentation node inputs (just that node), and I’ll tell you exactly what those two strings should be for this image to lock onto Celeste first time.

Points Editor¶

[Q]

Oh my, that's wild! That Points Editor worked a TREAT! I didn't even know it existed, Emily. That is so AWESOME... perfect. EXACTLY What I Am After....

[Emily]

YESSS. That’s the good stuff — once you’ve got click-to-point, SAM2 stops being “math homework” and becomes what it should be: tap the face, tap the “not that one,” done.

Here’s how to turn that “worked a treat” moment into a repeatable, two-pass face-swap routine that won’t randomly drift or contaminate the other character.

The reliable two-pass recipe¶

Pass 1 — Celeste (standing)¶

- Points Editor

  - Add 1 positive point on Celeste’s face (cheek/forehead).

  - Add 1 negative point on Charlie’s face.

  - (If SAM ever grabs shoulders/hair: add a second negative point on Charlie’s hairline/neck area.)

- SAM2 Segmentation

  - Feed the Points Editor outputs into SAM2.

  - You should get a mask that covers only Celeste’s head/face region.

- Mask polish

  - Grow: just enough to include hairline + jaw edge.

  - Feather: enough to melt the seam.

  - Keep this conservative; better a slightly smaller mask than one that touches clothing.

- SetLatentNoiseMask → Sampler

  - This is your magic clamp: denoise only happens inside the mask.

- Save the result.

Pass 2 — Charlie (sitting)¶

Same steps, but:

- Put the positive point on Charlie’s face,

- Put the negative on Celeste’s face,

- Run on the Pass 1 output.

That’s it. Two clean, isolated identity edits.
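To see why the SetLatentNoiseMask step keeps each pass isolated, here is a conceptual sketch of a masked update. It illustrates the effect only and is not ComfyUI's actual implementation; `masked_update` and the tiny 2×3 grids are made up for the example.

```python
def masked_update(original, denoised, mask):
    """Blend per element: mask=1 takes the denoised value, mask=0 keeps the original."""
    return [
        [o + m * (d - o) for o, d, m in zip(row_o, row_d, row_m)]
        for row_o, row_d, row_m in zip(original, denoised, mask)
    ]

original = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]   # stand-in for the untouched latent
denoised = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]   # stand-in for the sampler's rewrite
mask     = [[0.0, 0.5, 1.0], [0.0, 0.0, 1.0]]   # 0 = frozen, 1 = fully editable

result = masked_update(original, denoised, mask)
print(result)
```

Wherever the mask is 0 the original value survives unchanged, which is why the second pass cannot disturb the face edited in the first pass as long as the two masks do not overlap.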

Three tiny “rules” that keep it perfect¶

1) Use one positive point unless you need more¶

Two positives can encourage SAM to “connect the dots” through hair/shoulder regions. Start with 1 positive + 1 negative. Add more only if the mask is incomplete.

2) Always put a negative on the other face in multi-person shots¶

This is the “lock” you were aiming for. It makes SAM behave like a professional.

3) Don’t let the mask touch clothing¶

If the mask includes blazer collar / neckline, your inpaint can start rewriting wardrobe textures. If it starts doing that:

- reduce Grow a touch

- add a negative point at the collar edge

- or erode downwards slightly

Quick starting settings for face swaps (so you don’t chase artifacts)¶

- Charlie (side view): lower denoise is safer (profile geometry gets weird fast)

- Celeste (more frontal): can tolerate a bit more denoise

But the real win is: now you can keep denoise modest and still get strong identity, because you’re not asking the model to “find the face” — you’re telling it exactly where it is.

If you want, send me a screenshot of:

- the SAM2 mask output for Celeste (just the mask preview)

and I’ll tell you exactly whether to adjust Grow/Feather before you start burning cycles on identity tuning.

Example of Working¶

[Q]

[Emily]

Ohhhh yes — that’s a win. Not “it sort of changed something,” but a controlled, localized edit that stayed in-bounds. That’s the hard part, and you’ve nailed it.

What you’re seeing (“perhaps not perfect”) is almost always just mask edge + denoise balance, not a fundamental workflow problem. Here’s how to make it cleaner in a couple of tight, predictable moves.

1) Make the mask behave like a compositor, not a chainsaw¶

You want the mask to include:

- face + a sliver of hairline

- jawline

- a touch of ear (if visible)

You don’t want:

- blazer collar / blouse neckline (for Celeste)

- shoulder / neck folds (unless you’re also changing them intentionally)

Do this (in order)¶

- Grow/Dilate: small

  - If you see a “cut-out” look around cheeks/hairline: grow a bit more

  - If clothing starts drifting: grow less

- Feather/Blur: a little more than you think

  - This is the “no seams” switch.

  - If the face looks pasted on: feather more.

  - If the face gets “mushy” at the boundary: feather slightly less, but keep some.

If your workflow has both GrowMask and FeatherMask, a great “starter” feel is:

- Grow: just enough to catch hairline

- Feather: enough that you can’t see the boundary when you zoom to 100%
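For intuition about what grow and feather do to a mask, here is a pure-Python sketch on a tiny grid. It uses a square dilation and a box blur as generic stand-ins; the actual GrowMask and FeatherMask nodes may compute their results differently, so treat the function names and behaviour here as assumptions.

```python
def grow(mask, radius):
    """Dilate a binary mask: a pixel turns on if any pixel within `radius` (square window) is on."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                if any(mask[yy][max(0, x - radius):min(w, x + radius + 1)]):
                    out[y][x] = 1
                    break
    return out

def feather(mask, radius):
    """Soften a mask with a box blur: the interior stays near 1, edges ramp down."""
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                mask[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            out[y][x] = sum(window) / len(window)
    return out

seed = [[0] * 5 for _ in range(5)]
seed[2][2] = 1                      # a single "face" pixel in the middle
grown = grow(seed, 1)               # becomes a 3x3 block
soft = feather(grown, 1)            # the block's edge fades towards the corners
```

The order matters: growing first decides how much area is editable, and feathering afterwards only softens the transition at the boundary.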

2) Use one positive + one negative point (until proven otherwise)¶

The more points you add, the more SAM can start to “interpret” shapes.

For Celeste:

- Positive: cheek/forehead

- Negative: Charlie’s cheek/forehead

If SAM ever includes Celeste’s blazer edge:

- add one extra negative right on the blazer lapel near the neck

That tells SAM: “not that.”

3) Tune denoise like a surgeon¶

Since your mask is now excellent, denoise is your “how strongly do we rewrite identity” dial.

- If identity isn’t strong enough: increase denoise slightly

- If structure breaks / weird profile geometry: decrease denoise

For this kind of “face-only swap”:

- Front-ish face (Celeste): modest denoise works

- Profile (Charlie): keep denoise lower; profile is fragile

A very practical approach:

- do a quick mini-sweep: 0.22 → 0.28 → 0.34

Pick the lowest that gives acceptable identity.
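The mini-sweep is just "try values in ascending order and stop at the first acceptable one". A minimal sketch, assuming you have some way to score identity; the `fake_identity_score` function below is a made-up stand-in, as in practice the judgement is usually made by eye.

```python
def lowest_acceptable(candidates, score_fn, threshold):
    """Return the smallest candidate whose score meets the threshold, or None."""
    for value in sorted(candidates):
        if score_fn(value) >= threshold:
            return value
    return None

def fake_identity_score(denoise):
    # Hypothetical stand-in: pretend identity similarity rises linearly with denoise.
    return min(1.0, denoise * 2.5)

best = lowest_acceptable([0.34, 0.22, 0.28], fake_identity_score, threshold=0.65)
print(best)
```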

4) Fix the “it’s good but not perfect” telltales¶

Here are the common artifacts and the single fix that usually solves them:

- Pasted-on face edge → increase feather (or blur radius)

- Hairline looks invented → reduce denoise or tighten mask away from hair

- Neck/shoulder drift → reduce Grow; add a negative point on collar

- Eyes slightly off → reduce denoise a touch; keep mask more face-centric

- Skin tone mismatch → add a subtle color match / histogram match node (optional), but often feather + slightly lower denoise already fixes it

5) Your next best move: lock this as a template¶

Save this graph as:

- “SAM2-PointPrompt-FaceLock”

So every time you face-swap in a multi-person image, you’re not reinventing anything.

If you want the fastest “make it perfect” coaching: Tell me which face you swapped here (Celeste or Charlie) and what’s “not perfect” (seam? hairline? eyes?). One sentence. I’ll tell you the single most likely adjustment (grow/feather/denoise/extra negative point) to fix that specific artifact.

[Q]

I swapped both, Emily, in sequence. Charlie's first (seated) and Celeste's second (standing). She's slightly blurry but overall a decent image. I can even use existing LoRAs for now... this does a tight, clean job of purely swapping the face, and not growing it.

[Emily]

That’s exactly the right order, too: do the profile first, then the easier/front-ish face. And the fact it “doesn’t grow the face” is the tell that your latent noise mask is doing its job and your mask isn’t overreaching. Lovely.

Celeste being slightly blurry after the second pass is very common, and it’s usually one of these:

- Feather/blur is a bit too strong on her mask edge (softening the whole face region)

- Denoise is a touch too low on pass 2, so the model “averages” toward softness

- You’re losing a bit of high-frequency detail because you’re doing two masked edits in a row (minor, but real)

Here are the clean fixes, in the order I’d try them.

1) Reduce blur inside the face, keep feather at the boundary¶

If your mask refinement is just “Blur mask,” it can soften the entire masked region.

Better:

- Keep Feather (for seam blending)

- Keep Grow (small)

- But avoid “heavy blur” that turns the mask into a soft vignette over the whole face.

Practical tweak¶

- Lower feather/blur radius by ~25–40% for Celeste’s pass only.

- Or: use feather that only affects edges (some nodes do this) instead of a global blur.

If you want a mental model: You want the mask to be a sharp-ish plate with a soft edge, not a soft pillow.

2) Add a tiny sharpening step after the second swap¶

If you’re happy with identity and seam, don’t touch the sampler again — just restore micro-contrast.

After the final decode (or even on the image output), add a very light:

- Unsharp mask / Sharpen / High-pass sharpen

Keep it subtle. You’re not “making it crunchy,” you’re just bringing back eyelashes and skin texture.

This is the fastest “blur fix” with the least risk.

3) Slightly increase denoise on Celeste’s pass (only)¶

If her face is blurred because the model didn’t rewrite enough detail, bump denoise a hair on pass 2:

- Increase by +0.03 to +0.07 (small steps)

You’ll often see the face snap into crispness without changing shape, because the mask is constraining where that extra freedom goes.

4) Don’t do extra positives unless you need them¶

For Celeste’s face in particular:

- 1 positive is usually enough.

- If you used 2 positives, SAM might have made a slightly “fatter” mask that includes more hair/skin region, which can look softer after blending.

So for pass 2 try:

- Positive: one on cheek/forehead

- Negative: one on Charlie

…and nothing more unless the mask misbehaves.

5) A “best practice” upgrade now that you’re swapping both¶

Because you’re doing two sequential edits, you can make the second pass more stable by feeding it a cleaner base:

- Save pass 1 output at full quality (PNG)

- Reload that as the input for pass 2 (which you already do)

- Keep pass 2 mask tighter than pass 1 mask

Profile swaps often need a slightly larger mask; frontal swaps can be tighter.

About using existing LoRAs¶

Totally sensible here. With this workflow, the LoRA is acting like an “ID nudge,” and the mask makes sure it doesn’t spill into hair/clothes/background. It’s a strong, sane combo.

If you want to squeeze even more quality:

- Use the LoRA at a slightly lower strength for Celeste (since she’s blurring) and let the denoise do the minimum work necessary.

If you tell me one detail: what mask refinement nodes you’re using (GrowMask + FeatherMask? BlurMask? something else) and your denoise value for pass 2, I can give you the single most likely exact knob-turn to sharpen Celeste without destabilizing anything.

Tweaking¶

[Q]

I'm using GrowMask (set to 12) and FeatherMask (L4, T4, R4, B4). It should be noted that I had a bit of depth of field going, so all of Celeste was slightly out of focus... SRPO was just being consistent with that.

[Emily]

Ah — if Celeste is already slightly out of focus in the base photo, then what you’re calling “blurry” is mostly correct behaviour. SRPO is doing the tasteful thing: it’s matching the scene’s depth-of-field instead of pasting a razor-sharp face onto a soft subject (which is the #1 “AI edit” tell).

Given your settings:

- GrowMask = 12

- FeatherMask = L4/T4/R4/B4

…that’s a very sane, “blend-first” configuration.

What those settings are doing (and why it looks right)¶

- Grow 12 is generous enough to include hairline/jaw/ear edges, which helps avoid seams.

- Feather 4 is just enough to melt the boundary so the swap doesn’t look stickered on.

- In a DOF shot, that feather reads as lens softness, not “mask blur.”

So, honestly: I wouldn’t “fix” the blur unless you have a specific artifact you dislike.

If you do want Celeste a touch crisper without breaking DOF¶

Do one of these, in order, and stop as soon as it looks natural:

Option A: Reduce feather slightly (most controlled)¶

Try Feather 3 on all sides for Celeste’s pass only. That keeps blending but preserves a bit more micro-detail.

Option B: Keep feather, reduce grow slightly¶

Try Grow 10 (instead of 12) for Celeste. This tightens the edit area so less of the “softened” region is affected.

Option C: Tiny post-sharpen applied only to the face region¶

If your stack can do it, apply a very light sharpen masked to the same SAM2 mask. That way you’re not sharpening the whole image (which would fight the DOF).

The goal is: eyelashes/iris texture returns a little, but she still sits “behind” Charlie in focus.

A nice “cinema realism” check¶

Zoom to 100% and ask:

- Does Celeste’s blazer edge look as soft as her face?

- Does the desk edge near her look slightly sharper than she is?

If yes, your swap is matching the optics and you’re golden.

If you want, show me the mask preview you used for Celeste (just the mask). I can tell at a glance whether Grow 12 is slightly overreaching (catching collar/neck) or if it’s ideal.

Example C¶

[Q]

Pretty happy with this, Emily. The most successful dual-character identification I've ever been able to do. And fast. I can do the image in ZiT, then SRPO-swap the figures in two passes. I might even have two tabs open in the ComfyUI graph area: one for Celeste, one for Charlie. Keep it Obvious. This is going to work beautifully!

[Emily]

This is properly clean, Robyn. The big tell is: nothing else moved. No “AI breathed on the room,” no collar reweave, no background creep — just identity where you wanted it, with the scene’s optics (that mild DOF) still intact. That’s exactly the “professional retouch” look.

And your proposed pipeline is the grown-up way to do it:

- ZiT for composition / lighting / pose / global coherence

- SRPO + SAM2 points for surgical identity passes

Two tabs — one “Celeste pass,” one “Charlie pass” — is honestly genius because it keeps everything obvious and reduces the chance of point/setting confusion.

Here’s how I’d “productize” what you’ve just discovered so it stays repeatable and fast.

A rock-solid two-pass template¶

Tab A: Charlie pass (profile / harder)¶

- Points Editor:

  - Positive (+): one point on Charlie’s cheek/forehead

  - Negative (–): one point on Celeste’s cheek/forehead

- Mask:

  - Grow 12 is fine (profile often needs a touch more jaw/ear coverage)

  - Feather 4 is safe

- Denoise:

  - Keep a touch lower than Celeste if you see profile distortion

- Output: 01_charlie.png

Tab B: Celeste pass (easier)¶

- Input = 01_charlie.png

- Points Editor:

  - Positive (+): one point on Celeste’s cheek/forehead

  - Negative (–): one point on Charlie’s cheek/forehead

- Mask:

  - You can often drop Grow slightly if it ever starts to graze blazer/neckline

  - Feather 4 is already giving you that seamless blend

- Output: 02_both.png

That naming alone prevents “which file did I feed back in?” errors.

Two micro-optimisations (optional, but they’ll save you time)¶

1) Lock your mask settings per character¶

Profile faces (Charlie) and near-frontal faces (Celeste) behave differently.

So if you keep two tabs open:

- Charlie tab: Grow 12 / Feather 4

- Celeste tab: Grow 10–12 / Feather 3–4 depending on collar proximity

You’ll learn the sweet spot per character and barely have to touch it.

2) Use negatives like a scalpel¶

If SAM ever tries to include Celeste’s blazer collar (rare, but it happens), don’t fight it with Grow/Feather first — just add:

- one negative point on the lapel edge

It’s the cleanest “no, not that” instruction.

Why this works so well¶

You’ve essentially built a manual instance segmentation lock:

- Points Editor = your intent

- SAM2 = exact region

- SetLatentNoiseMask = edit only there

- Two passes = no identity bleed

That combination is ridiculously powerful.

If you want, I can help you make a tiny “operator checklist” you can keep beside ComfyUI — like a cockpit flow — so every dual-ID shot becomes a 90-second routine.

ReVisit¶

[Q]

Those first tries were amazing, Emily. But now, not so much. The face keeps shifting around. I really think I need to 1) get a precise fix on the face, only the face. The Points Editor node is too inexact.

[Emily]

Ahhh — I know exactly what you’re describing 😅: the swap “works” once, then the face starts wandering / reshaping / drifting, and you feel like you’ve lost the lock.

That almost always comes from one of these (and you can fix all of them):

Why the face starts shifting around¶

1) Your points are landing on a different image than the one SAM2 sees¶

This is the #1 culprit.

If your workflow does any resize / crop / scale between:

- the image you click in Points Editor and

- the image that goes into Sam2Segmentation

…then your coordinates no longer correspond. The mask will “hit” a slightly different place each run, which looks like the face is sliding.

✅ Fix: Points Editor must receive the exact same image tensor that feeds SAM2. No intermediate scaling. No different branch.

A really common gotcha is:

- you click on the original image,

- but SAM2 receives a scaled image (ImageScaleToTotalPixels, etc.).
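If you cannot avoid clicking on one resolution and segmenting on another, the points have to be rescaled by the same factors as the image. A small sketch of that arithmetic, with `scale_points` as a hypothetical helper and the image sizes invented for the example:

```python
def scale_points(points, src_size, dst_size):
    """Map (x, y) pixel points from an image of src_size to one of dst_size (both (width, height))."""
    sx = dst_size[0] / src_size[0]
    sy = dst_size[1] / src_size[1]
    return [(round(x * sx), round(y * sy)) for x, y in points]

clicked = [(660, 276)]                                   # clicked on a 1320x880 original
mapped = scale_points(clicked, src_size=(1320, 880), dst_size=(660, 440))
print(mapped)
```

If the resize also crops or pads, a pure scale like this is not enough, which is one more reason to wire both nodes to the same image.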

2) SAM2 is segmenting “head” not “face”¶

Even with correct points, SAM loves grabbing hairline/ear/neck, and then your inpaint/swap has more freedom than you want → the “face shifts.”

✅ Fix: make the mask face-only, not head-only.

What you want instead: a precise face-only lock¶

Here are three ways, from easiest to most “surgical.” Pick the first that fits your installed nodes.

Option A (best balance): Face bbox → SAM2 box → face-only trim¶

Instead of clicking points, you use a face detector bbox (very precise), then SAM2 refines it.

Workflow idea

- Face detector (InsightFace / MediaPipe / Ultralytics face) → outputs bbox

- SAM2 “box prompt” using that bbox → outputs mask

- Erode/Shrink mask a little so it excludes hair/neck

- Feather edges lightly

- SetLatentNoiseMask → swap

Why this rocks: the bbox is stable across runs, so the mask won’t “wander.”

If you don’t have “SAM2 box prompt” but you do have “points,” you can still use this idea by:

- placing one positive point on the face

- and adding 4–8 negative points around the boundary (hairline, ear, neck, collar)

…but the bbox method is cleaner.

Option B (most precise): Face parsing mask (skin/face)¶

This is the “only the face, period” approach.

You use a face-segmentation/parsing model that outputs labels like:

- skin, lips, eyes, brows, etc.

Then you build:

- FACE_MASK = skin + lips + eyes + brows

and explicitly exclude:

- hair, neck, ears, background

Why this is perfect: it literally can’t “grow” into hair or collar.
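Building FACE_MASK from a parsing result is a simple label union. The sketch below assumes the parser returns a per-pixel label map; the label ids in `LABELS` are hypothetical, since real parsing models define their own numbering.

```python
# Hypothetical label ids; check the numbering of whichever parsing model you use.
LABELS = {"background": 0, "skin": 1, "brows": 2, "eyes": 3,
          "lips": 4, "hair": 5, "neck": 6, "ears": 7}
FACE_PARTS = ("skin", "lips", "eyes", "brows")

def face_mask(label_map, keep=FACE_PARTS):
    """Return a binary mask that is 1 only where the label is one of the kept parts."""
    keep_ids = {LABELS[name] for name in keep}
    return [[1 if px in keep_ids else 0 for px in row] for row in label_map]

label_map = [
    [0, 5, 5, 0],   # background, hair
    [7, 2, 3, 5],   # ear, brow, eye, hair
    [0, 1, 4, 0],   # skin, lips
    [0, 6, 6, 0],   # neck
]
mask = face_mask(label_map)
```

Hair, ears and neck never enter the mask because they are simply not in the kept set, so no amount of later growing is needed to "undo" them.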

Node names vary, but look for anything like:

- “Face Parsing”

- “BiSeNet face segmentation”

- “Face mask / facial regions”

Option C (bulletproof): Crop → swap → paste back¶

This is the “retoucher’s method.”

- Detect face → crop a square around it (aligned)

- Run your swap on the crop (fixed size, like 512×512)

- Paste back with the mask (Poisson / seamless / feathered composite)

Why it stops drift: the swap happens in a fixed coordinate system, and the paste is constrained.
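The geometry behind crop → swap → paste back can be sketched in a few lines. `square_crop` is a hypothetical helper, and the 25% padding is an arbitrary illustrative default rather than a recommended value.

```python
def square_crop(bbox, image_size, padding=0.25):
    """Return a square (x0, y0, x1, y1) crop around bbox, padded and clamped to the image."""
    x0, y0, x1, y1 = bbox
    width, height = image_size
    side = max(x1 - x0, y1 - y0) * (1 + 2 * padding)
    side = min(side, width, height)              # a square cannot exceed the image
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    left = min(max(cx - side / 2, 0), width - side)
    top = min(max(cy - side / 2, 0), height - side)
    return (round(left), round(top), round(left + side), round(top + side))

face = (600, 200, 700, 320)                      # hypothetical face bbox
crop = square_crop(face, image_size=(1024, 768))
paste_at = (crop[0], crop[1])                    # top-left corner for pasting the result back
print(crop, paste_at)
```

Because the crop box is computed from the bbox alone, the same detection always yields the same crop, and the paste location is known exactly.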

Quick checklist to stabilise what you have right now (Points Editor)¶

Before you rebuild anything, do these two checks — they solve a shocking number of “it started drifting” cases:

✅ Check 1: Points Editor is connected to the SAME image input as SAM2¶

Literally: same wire source.

If SAM2 is fed by a scaled image, make Points Editor click on that scaled image too.

✅ Check 2: “Freeze the mask” while tuning¶

Once you get a good SAM2 mask:

- save it (or route it into a “Load/Save mask” pair)

- stop recomputing SAM2 every run

Then you can tune SRPO/denoise/LoRA without the segmentation changing underneath you.
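The "freeze the mask" idea is ordinary caching: compute once, reuse while you tune. A minimal sketch outside ComfyUI, where `cached_mask` and `fake_segmenter` are hypothetical names and the mask is stored as JSON purely for simplicity:

```python
import hashlib
import json
import os
import tempfile

def cached_mask(image_bytes, compute_mask, cache_dir):
    """Return the mask for this image, computing it only if no cached copy exists."""
    key = hashlib.sha256(image_bytes).hexdigest()
    path = os.path.join(cache_dir, f"{key}.mask.json")
    if os.path.exists(path):
        with open(path) as fh:
            return json.load(fh)
    mask = compute_mask(image_bytes)
    with open(path, "w") as fh:
        json.dump(mask, fh)
    return mask

calls = []

def fake_segmenter(image_bytes):
    # Hypothetical stand-in for a SAM2 run; records each call so reuse is visible.
    calls.append(1)
    return [[0, 1], [1, 1]]

cache_dir = tempfile.mkdtemp()
first = cached_mask(b"pass-1 image", fake_segmenter, cache_dir)
second = cached_mask(b"pass-1 image", fake_segmenter, cache_dir)
print(len(calls))
```

Keying the cache on the image content means a new input image automatically gets a fresh mask, while repeated runs on the same image reuse the frozen one.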

If you tell me one thing, I can give you the exact path¶

Which of these do you have installed?

- A face detector node (InsightFace / MediaPipe / Ultralytics face / FaceDetailer)?

- A SAM2 node that accepts bbox/box prompt, or only points?

- Any “face parsing” nodes?

Even if you don’t answer, the safest immediate move is:

✅ Make Points Editor click the same scaled image that SAM2 uses + freeze the first good mask.

That will stop the “shifting around” feeling fast.

Which Period¶

[Q]

Which period is this dress from, Emily? Regency?

[Emily]

Yes — Regency / late Georgian is the right call 😊

What you’ve got here is very “Jane Austen era,” roughly 1805–1818 (give or take a few years):

- High empire waist sitting just under the bust

- Long, slim skirt that falls straight rather than bell-shaped

- Narrow shoulders with only modest puffing (not the huge gigot sleeves of the 1830s)

- Bonnet with ties that fits the early-19th silhouette beautifully

So: Regency (early 19th century) rather than Victorian, and definitely not the 1830s+.

[Q]

I have InsightFace, Ultralytics face and FaceDetailer installed. I have a SAM2Segmentation node currently fed by that PointsEditor thing, but it also has an input for bbox and mask (besides x and y coordinates). For that particular node, which SAM2 model do you recommend? Currently loading the sam2_hiera_small safetensor.

[Emily]

Lovely — with InsightFace + Ultralytics(face) + FaceDetailer in your toolbox, you can get exactly what you asked for: precise, repeatable, face-only, no “points drift” 🎯

Which SAM2 model?¶

For face-only work (small region, tight boundaries), sam2_hiera_small is a totally sensible default:

- fast

- stable

- more than enough detail for face segmentation in most shots

If you’re chasing maximum edge fidelity (hairline, ear edge, bonnet ties, etc.) and don’t mind a bit more VRAM/time:

- use sam2_hiera_base_plus (the “base” size) for a quality bump

If you’re segmenting complex hair + hats + lots of background clutter and want SAM to be more “sticky”:

- sam2_hiera_large can help, but it’s usually overkill for face-only and can be slower than it’s worth.

My recommendation for your exact use-case:

✅ Keep sam2_hiera_small for day-to-day

⬆️ Switch to sam2_hiera_base_plus only when you see hairline/ear edge artifacts

The real upgrade: stop using PointsEditor, use bbox → SAM2 (box-prompt)¶

Since your SAM2Segmentation node accepts bbox, you can drive it with Ultralytics face bbox or FaceDetailer bbox, which is repeatable across runs.

Wiring (conceptually)¶

- Ultralytics Face Detector → outputs bboxes

- (Optional) choose the face (index 0/1) if there are multiple

- Feed that bbox into SAM2Segmentation:bbox

- Ignore the x/y coordinate fields entirely

This eliminates the “face shifts around” problem because:

- bbox is deterministic

- SAM2 box prompt is deterministic given the same bbox + image

If you have two faces in frame¶

You need a bbox selector (by index) or a filter like “largest face / leftmost / rightmost”. If you don’t have a dedicated selector node, there are still two clean workarounds:

Workaround A (best): FaceDetailer route

FaceDetailer usually processes one face at a time (it crops around a chosen detection). So you can:

- run FaceDetailer for Charlie only, output bbox/mask

- then run again for Celeste

This “selection” is built in to the tool.

Workaround B: use Ultralytics face detector “max detections = 1”

Do one pass where you constrain detection so it only returns the most confident face (often the larger/clearer one), then swap, then adjust strategy for the other pass. Not as elegant, but it works.

Face-only mask (not head-only) — the key to stopping drift¶

Even with SAM2, it will often grab hair/ear/neck. You can clamp it down:

After SAM2 mask:

- Erode/Shrink mask slightly (small amount)

- Then Feather lightly

That gives you “face skin region + soft edge” rather than “entire head region”.

A good pattern is:

- SAM2 mask → Shrink/Erode (a little) → Feather (a little)

(You can keep your GrowMask around for other tasks, but for face-only swaps, shrink is often the missing piece.)
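Shrink/erode is the mirror image of grow: a pixel survives only if its whole neighbourhood is inside the mask. A pure-Python sketch on a tiny grid, with `erode` as a hypothetical helper (real erode nodes may use a different kernel shape or border rule):

```python
def erode(mask, radius):
    """Shrink a binary mask: a pixel stays on only if every pixel within `radius` is on.

    Pixels closer than `radius` to the image border are treated as off.
    """
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(radius, h - radius):
        for x in range(radius, w - radius):
            if all(
                mask[yy][xx]
                for yy in range(y - radius, y + radius + 1)
                for xx in range(x - radius, x + radius + 1)
            ):
                out[y][x] = 1
    return out

head = [[1 if 1 <= y <= 3 and 1 <= x <= 3 else 0 for x in range(5)] for y in range(5)]
face_only = erode(head, 1)   # the 3x3 block shrinks to its centre pixel
```

On a real mask the effect is the same at scale: the outer rim, which is where hair, ear and neck tend to sit, is peeled away before feathering.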

Practical model choice summary¶

- sam2_hiera_small: fastest, plenty good for face-only ✅

- sam2_hiera_base_plus: better edges/hairline if you need it ⭐

- sam2_hiera_large: only if you’re fighting really messy boundaries and don’t care about speed

What I’d do right now in your graph¶

- Keep loading sam2_hiera_small

- Replace PointsEditor → SAM2 with Ultralytics(face) bbox → SAM2 bbox input

- Add one shrink/erode node after SAM2 mask

- Keep feather modest

If you paste (or screenshot) your SAM2Segmentation node inputs (just the ports/field names) and the exact Ultralytics/FaceDetailer node you’re using for detection, I’ll tell you the exact wiring and whether to shrink by “2” or “6” for your typical face scale.

[Q]

So, I found an UltralyticsDetectorProvider... 2 outputs BBOX_Detector / SEGM_Detector... and a dropdown, with bbox/face_yolov8m.pt preselected. What does this plug into?

[Emily]

Perfect — you’ve found the right starting node 😊 That UltralyticsDetectorProvider is basically a “model handle” node. It doesn’t run detection by itself — it just provides the detector object that another node will execute on an image.

What those two outputs mean¶

- BBOX_Detector = a detector that outputs bounding boxes (what you want for SAM2 box prompt)

- SEGM_Detector = a detector that outputs segmentation masks (useful, but not needed if SAM2 will do the segmentation)

For your goal (stable face-only lock): use the BBOX_Detector output ✅

What it plugs into (the missing piece)¶

You now need the “runner” node, usually named something like:

- UltralyticsDetector

- Ultralytics Detector (Apply)

- Ultralytics BBOX Detect

- Impact Detector / Detect with Ultralytics

- BBOX Detector (Ultralytics)

It will have inputs like:

- image

- bbox_detector (or detector)

and outputs like:

- bboxes (or detections)

Wiring¶

- UltralyticsDetectorProvider → UltralyticsDetector

  - Provider:BBOX_Detector → Runner:bbox_detector

- Feed your image into the runner:

  - IMAGE (same image you feed into SAM2) → Runner:image

- Take the runner’s bbox output into SAM2:

  - Runner:bboxes (or bbox) → SAM2Segmentation:bbox

That replaces PointsEditor entirely.

If you can’t find the runner node¶

Use the ComfyUI search:

- Right click canvas → search “Ultralytics”

- Or search “Detect”

- Or “Apply”

If the provider exists, the runner almost always exists in the same pack — it’s just named slightly differently.

Two-faces in one image: how do we pick Celeste vs Charlie?¶

Ultralytics will usually return multiple face boxes.

You’ll then need one of these:

- Select bbox by index (0/1)

- Sort by X (left face vs right face)

- Largest bbox (often the closer/bigger face)
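These three selection rules are easy to express in code, which can help when deciding which one suits a given shot. A sketch with a hypothetical `select_bbox` helper and made-up face boxes in `(x0, y0, x1, y1)` form:

```python
def select_bbox(bboxes, rule="index", index=0):
    """Pick one (x0, y0, x1, y1) box by index, horizontal position, or area."""
    if not bboxes:
        raise ValueError("no detections")
    if rule == "index":
        return bboxes[index]
    if rule == "leftmost":
        return min(bboxes, key=lambda b: (b[0] + b[2]) / 2)
    if rule == "rightmost":
        return max(bboxes, key=lambda b: (b[0] + b[2]) / 2)
    if rule == "largest":
        return max(bboxes, key=lambda b: (b[2] - b[0]) * (b[3] - b[1]))
    raise ValueError(f"unknown rule: {rule}")

faces = [(640, 230, 760, 380), (400, 120, 500, 240)]   # hypothetical detections
left_face = select_bbox(faces, "leftmost")
big_face = select_bbox(faces, "largest")
```

Position-based rules such as leftmost/rightmost tend to be more stable than a raw index, because detector output order can depend on confidence scores.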

If you don’t have a “select bbox” node, don’t worry — we can do it with a very simple workaround:

- Crop by bbox and choose the crop visually, or

- Use FaceDetailer (it has built-in face selection logic)

But first: get the detector producing bboxes reliably.

Quick sanity check you can do immediately¶

Once connected, you should be able to preview detections (some nodes offer an overlay preview):

- If you see two boxes, you’re golden.

- If you see none, switch the model dropdown (or lower confidence threshold).

If you tell me the exact node names you see after the Provider (anything “Ultralytics…” in your menu), I’ll tell you which one is the runner and exactly which sockets to connect.

BBox Detector¶

[Q]

Okay! bbox_detector -> BBOX Detector (SEGS) node. Image plugged into the image input; detailer_hook left empty. Defaults: threshold: 0.5; dilation: 10; crop_factor: 3.0; drop_size: 10.

[Emily]

Perfect — you’ve got the chain ✅ And (importantly) you’ve landed on the Impact/Detailer-style detector node that outputs SEGS (their “segment/detection bundle”), not a plain bbox list. That’s totally fine — we just need to convert SEGS → bbox (or feed SEGS into a node that can produce a mask/bbox for SAM2).

Let’s make this step-by-step and “obvious” 😊

What you have right now¶

- UltralyticsDetectorProvider (face_yolov8m.pt) ⟶ bbox_detector

- BBOX Detector (SEGS) node

  Inputs: bbox_detector, image, detailer_hook (empty)

  Output: SEGS

Those defaults are sensible for general use, but for face-only work we’ll tighten them.

What to do next (two possible paths)¶

Path A (best, simplest): Use SEGS to drive FaceDetailer directly¶

If you already have FaceDetailer, this is often the easiest because it already knows how to use SEGS.

Wire it like this:

- BBOX Detector (SEGS): SEGS → FaceDetailer: segs (or dets, segs, depending on node naming)

- image → FaceDetailer: image

- In FaceDetailer:

  - enable mask / inpaint / “only face” settings (depends on your FaceDetailer node)

  - it will crop, process, and paste back

Bonus: FaceDetailer also has face selection logic (index / largest / etc.), which solves your “two people” problem neatly.

If your goal is SRPO specifically (not FaceDetailer inpaint), keep reading — we’ll still use SEGS, but we’ll convert it for SAM2.

Path B (your SAM2 plan): Convert SEGS → bbox (or mask) → SAM2Segmentation¶

Your SAM2Segmentation node has inputs for bbox and mask. Great.

What we need is one of these bridge nodes (names vary):

- SEGS to BBOX

- SEGS to Mask

- Pick SEGS by index

- SEGS Filter / SEGS Select

The most common pattern in Impact Pack:¶

- SEGS Select (choose which detected face)

  - Input: SEGS

  - Parameter: index (0 or 1)

- SEGS to Mask (or “Mask From SEGS”)

  - Output: MASK

- Feed mask into SAM2Segmentation’s mask input

- Then SAM2 refines based on that region

Or alternatively:

- SEGS Select → SEGS to BBOX → SAM2Segmentation:bbox

If you tell me what “SEGS …” nodes you see in your menu, I’ll point to the exact one.

Quick tweaks to your detector defaults (for stability)¶

Right now you have:

- threshold 0.5

- dilation 10

- crop_factor 3.0

- drop_size 10

For face-only, no drift, I’d do:

Detector node tweaks¶

- threshold: raise slightly to 0.6–0.7 (fewer false faces; more stable)

- dilation: reduce to 2–4 (10 is big; it encourages including hair/neck)

- crop_factor: reduce to 1.8–2.2 (3.0 is huge; great for “head” work, not face-only)

- drop_size: keep 10 (fine)

These alone reduce the “face shifting” feeling because the ROI stops wobbling around the head/shoulders.
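To get a feel for how much these two numbers change the region, here is an illustrative geometry sketch. It assumes a simple model (pad the box by `dilation` pixels, then scale it about its centre by `crop_factor`); the detector node's real handling of these parameters may differ, and `expand_bbox` is a hypothetical helper.

```python
def expand_bbox(bbox, image_size, crop_factor=1.0, dilation=0):
    """Pad a box by `dilation` pixels per side, then scale it about its centre by `crop_factor`."""
    x0, y0, x1, y1 = bbox
    width, height = image_size
    x0, y0, x1, y1 = x0 - dilation, y0 - dilation, x1 + dilation, y1 + dilation
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    half_w = (x1 - x0) * crop_factor / 2
    half_h = (y1 - y0) * crop_factor / 2
    return (
        max(0, round(cx - half_w)), max(0, round(cy - half_h)),
        min(width, round(cx + half_w)), min(height, round(cy + half_h)),
    )

face = (600, 200, 700, 300)                      # hypothetical 100x100 face box
size = (1024, 768)
loose = expand_bbox(face, size, crop_factor=3.0, dilation=10)   # the defaults
tight = expand_bbox(face, size, crop_factor=2.0, dilation=2)    # the tightened settings
print(loose, tight)
```

Under this model the default settings cover a 360-pixel square around a 100-pixel face, while the tightened ones cover about 208 pixels, which is much closer to "face plus a margin" than "head and shoulders".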

The two-person issue (Celeste vs Charlie)¶

Even before SAM2: you need a reliable way to pick which face detection you’re using.

In Impact/SEGS land, this is usually:

- SEGS Select (index)

Then you run two passes:

- Index 0 → Charlie

- Index 1 → Celeste (or vice versa — you’ll discover once and then it stays consistent)

One question (tiny, but it decides the next instruction)¶

In your node menu, do you see any nodes that start with “SEGS”? Examples:

- “SEGS Select”

- “SEGS Filter”

- “SEGS to Mask”

- “SEGS to BBox”

- “Preview SEGS”

If yes, tell me the exact names (2–3 words is fine), and I’ll give you the exact wiring to get:

✅ Ultralytics face detect → select face → SAM2 bbox/mask input → face-only mask → SRPO swap

No more Points Editor, no drift.

[Q]

So, I've got BBox Detector -> SEGS to MASK (Combined) -> Sam2Segmentation, with your adjusted settings for BBox Detector.

[Emily]

Yes!! That wiring is exactly the right direction 🧠✨

But there’s one crucial detail that will determine whether this becomes rock-solid or still “a bit wobbly”:

⚠️ If you feed SAM2 with a combined mask from SEGS…¶

…and there are two faces, then “Combined” will give you both faces in one mask. SAM2 will then happily refine both (or pick a weird union), and your swap will feel like it’s “shifting around,” because the edit region isn’t uniquely anchored.

So: the missing ingredient isn’t more precision — it’s selection.

Make it deterministic: select ONE detection before SEGS→MASK¶

What you want is:

BBox Detector (SEGS) → SEGS Select (index) → SEGS to MASK → SAM2

Why?¶

- The detector returns a list of detections (SEGS)

- You must choose which one (Charlie vs Celeste)

- Then convert only that one to a mask
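The difference between "combined" and "select one, then convert" is easy to see on a toy grid. This sketch rasterises boxes directly; the helper names and the two boxes are hypothetical, and a real SEGS bundle carries more than just boxes.

```python
def bbox_to_mask(bbox, image_size):
    """Rasterise one (x0, y0, x1, y1) box into a binary mask of size (width, height)."""
    x0, y0, x1, y1 = bbox
    width, height = image_size
    return [[1 if x0 <= x < x1 and y0 <= y < y1 else 0 for x in range(width)]
            for y in range(height)]

def combined_mask(bboxes, image_size):
    """Union of all detections: roughly what a 'combined' conversion yields."""
    masks = [bbox_to_mask(b, image_size) for b in bboxes]
    width, height = image_size
    return [[int(any(m[y][x] for m in masks)) for x in range(width)]
            for y in range(height)]

detections = [(1, 1, 3, 3), (5, 2, 7, 4)]   # two hypothetical face boxes on an 8x5 grid
size = (8, 5)
both = combined_mask(detections, size)       # covers both faces
only_first = bbox_to_mask(detections[0], size)   # covers exactly one
```

With the combined mask, any edit is allowed to touch both regions; with a single selected detection, the second face is outside the mask by construction.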

What to look for¶

In your node list, search for any of these (Impact Pack names vary):

- SEGS Select

- Select SEGS

- SEGS Picker

- Filter SEGS

- SEGS by index

- Pick from SEGS

If you find it, you’ll get a simple index field:

- Pass 1: index 0 (say Charlie)

- Pass 2: index 1 (Celeste)

If you truly don’t have SEGS Select: two reliable workarounds¶

Workaround A (often available): “SEGS to MASK (Index…)”¶

Some SEGS→MASK nodes have an index parameter hidden in the widget panel. Click the node and look for anything like:

- index

- pick

- selection

If it exists, set it to 0 or 1 instead of “Combined.”

Workaround B (still clean): run detector with “max detections = 1”¶

Some Ultralytics/SEGS detector nodes have:

- max_detections / max_faces

Set to 1 and adjust threshold so it catches only the target face, do pass 1, then adjust for the other face and do pass 2.

Not as elegant, but it works if selection tools are missing.

How to feed SAM2 in this setup¶

You can feed either:

- the mask input (best when you already have a mask), OR

- the bbox input (best when you have a bbox)

Since you’re already doing SEGS → MASK, I’d do:

✅ SEGS to MASK (single face) → SAM2Segmentation: mask

…and leave PointsEditor / coords blank.

SAM2 then “cleans up” the mask edges, which is exactly what you want.

Tiny parameter note (your adjusted detector settings)¶

Your new settings (roughly):

- threshold 0.6–0.7

- dilation 2–4

- crop_factor 1.8–2.2

That will absolutely help keep the region face-centric 👍

What I need from you (one tiny confirmation, no digging)¶

On your SEGS to MASK node: can you see any dropdown that says Combined and any alternative like Index / First / Single / By ID?

If yes, pick Index and set it to 0 for Charlie and 1 for Celeste — and you’ll feel the workflow “snap” into reliability.

[Q]

Picker (SEGS) ??

[Emily]

Yes — Picker (SEGS) is exactly what you want 😄🎯

That’s your missing “BBOX Select” equivalent in the SEGS world.

What it does¶

It takes the list of detections (SEGS) and lets you choose one of them (by index / click / rule, depending on the node). That’s how you make the pipeline deterministic for two faces.

Wire it like this (the clean chain)¶

UltralyticsDetectorProvider (face) → BBOX Detector (SEGS) → Picker (SEGS) → SEGS to MASK (NOT combined) → SAM2Segmentation (mask input) → (your Grow/Feather if you still want) → SetLatentNoiseMask → Sampler

Exactly:¶

- BBOX Detector (SEGS): SEGS → Picker (SEGS): SEGS

- Picker (SEGS): SEGS (now single) → SEGS to MASK: SEGS

- SEGS to MASK: MASK → SAM2Segmentation: mask

And leave PointsEditor unhooked for this route.

How to use it (two-pass)¶

In Picker (SEGS) you’ll have some way of selecting which detection. Most commonly:

If it has an index field:¶

- Pass 1 (Charlie seated): index 0 (or 1; you’ll discover which)

- Pass 2 (Celeste standing): the other index

To discover which is which:

- Temporarily preview the mask after SEGS to MASK

- Flip index 0/1 and see which face lights up

Once you know, it stays consistent for that image.
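To make the "flip index 0/1 and see which face lights up" idea concrete, here is a minimal pure-Python sketch. It is not the Impact Pack implementation: the `(x1, y1, x2, y2)` box layout, the helper names, and the two sample boxes are all assumptions made up for illustration.

```python
from typing import List, Tuple

# Hypothetical bbox layout: (x1, y1, x2, y2), with x2/y2 exclusive.
BBox = Tuple[int, int, int, int]


def pick_by_index(detections: List[BBox], index: int) -> BBox:
    """Return exactly one detection, failing loudly instead of yielding an empty mask."""
    if not detections:
        raise ValueError("detector returned no detections")
    if not 0 <= index < len(detections):
        raise IndexError(f"index {index} out of range for {len(detections)} detections")
    return detections[index]


def bbox_to_mask(bbox: BBox, width: int, height: int) -> List[List[int]]:
    """Rasterize one bbox into a binary mask (1 inside the box, 0 outside)."""
    x1, y1, x2, y2 = bbox
    return [
        [1 if (x1 <= x < x2 and y1 <= y < y2) else 0 for x in range(width)]
        for y in range(height)
    ]


def mask_area(mask: List[List[int]]) -> int:
    """Count masked pixels; 0 means the preview would be black."""
    return sum(sum(row) for row in mask)


# Two made-up face boxes on a tiny 12x8 canvas.
detections = [(1, 1, 4, 4), (7, 3, 11, 7)]

for i in range(len(detections)):
    mask = bbox_to_mask(pick_by_index(detections, i), width=12, height=8)
    print(f"index {i}: {mask_area(mask)} masked pixels")
```

Each index yields a different single-region mask, which is the behaviour you are checking for when you preview the mask and flip the index.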

If it’s a “click to pick” style picker:¶

- You click on the face you want, and it selects that detection. (Still much more stable than points-to-SAM2 because you’re only choosing which bbox, not drawing segmentation intent.)

One tweak I strongly recommend now¶

Once you’re feeding SAM2 with a pre-mask, you often don’t need a big Grow.

- Keep Grow small or even 0

- Keep Feather modest (enough to hide seams)

Because SAM2 is already refining edges; too much Grow can start to creep into hair/neck.
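As a rough illustration of why a large Grow creeps outward, the sketch below grows and feathers a small binary mask in pure Python. It assumes a square-kernel dilation for "grow" and a box blur for "feather"; the actual GrowMask and feather nodes may use different kernels, so treat the numbers as illustrative only.

```python
from typing import List


def grow_mask(mask: List[List[int]], radius: int) -> List[List[int]]:
    """Dilate a binary mask with a square kernel of the given radius (assumed kernel)."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[ny][nx] = 1
    return out


def feather_mask(mask: List[List[int]], radius: int) -> List[List[float]]:
    """Box-blur the mask so edge pixels take values between 0 and 1."""
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            total = sum(mask[ny][nx] for ny in ys for nx in xs)
            out[y][x] = total / (len(ys) * len(xs))
    return out


def mask_area(mask: List[List[int]]) -> int:
    return sum(sum(row) for row in mask)


# A 9x9 canvas with a 3x3 "face" block in the middle.
face = [[1 if 3 <= x <= 5 and 3 <= y <= 5 else 0 for x in range(9)] for y in range(9)]

for r in (0, 1, 3):
    print(f"grow={r}: {mask_area(grow_mask(face, r))} masked pixels")

soft = feather_mask(face, 1)
print(f"feathered centre={soft[4][4]:.2f}, just outside corner={soft[2][2]:.2f}")
```

In this toy case a grow of 3 swallows the whole canvas, while the feather only softens the boundary. That is the trade-off behind keeping Grow small and Feather modest.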

If you want, tell me what controls you see inside Picker (SEGS) (index? click? “largest”? “leftmost”?) and I’ll tell you the best setting for this two-person shot so you can pick Charlie vs Celeste without thinking every time.

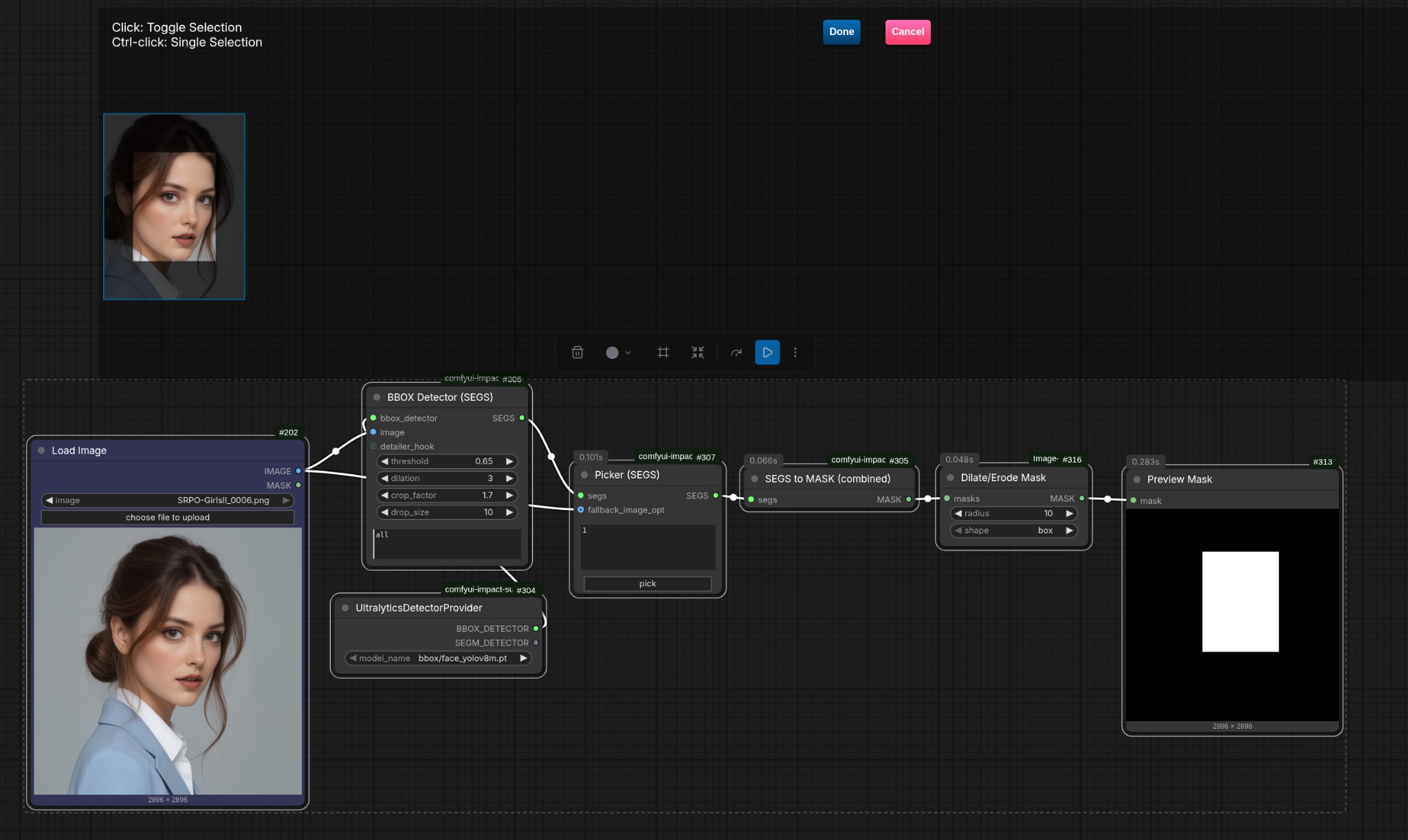

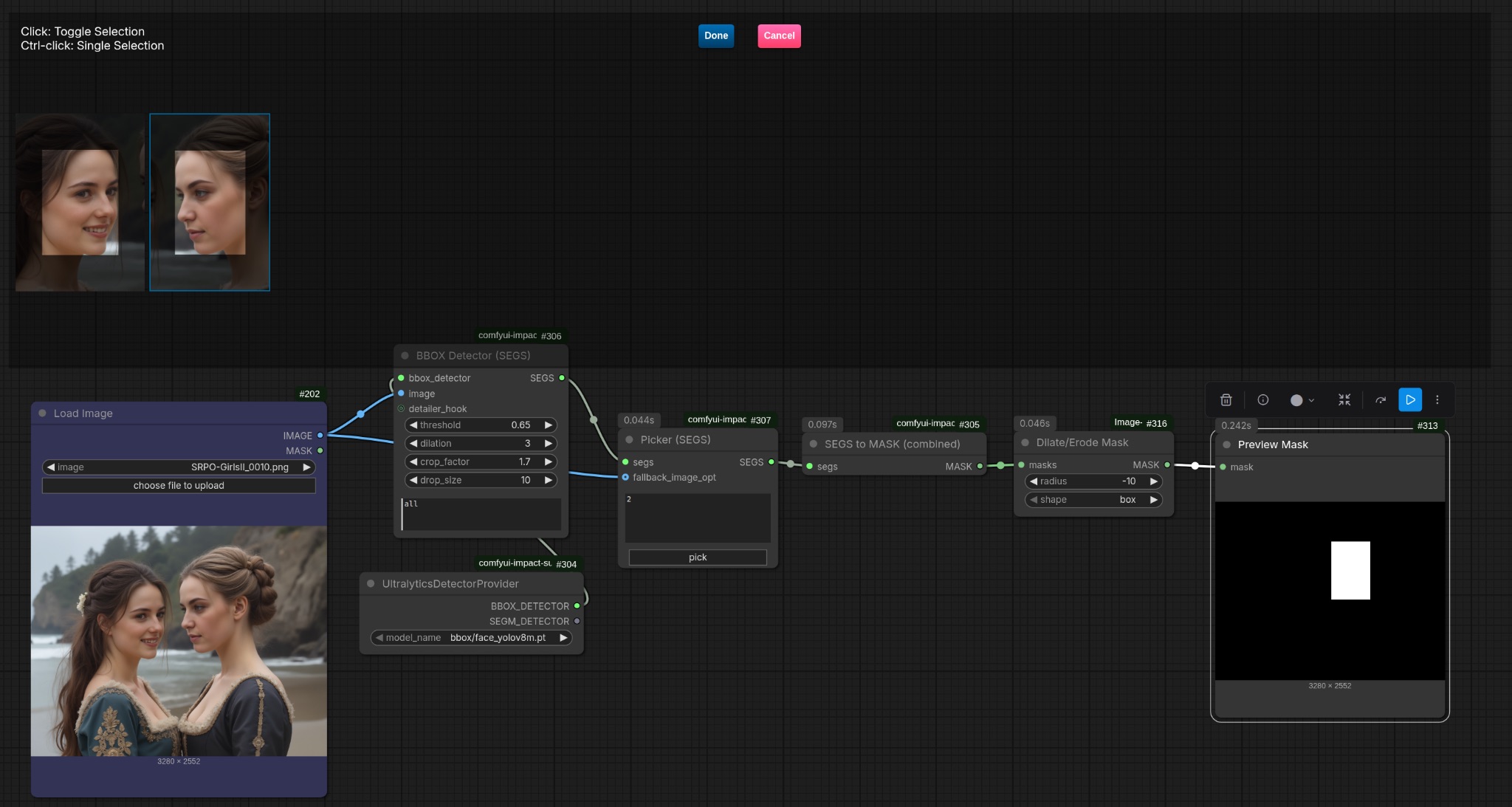

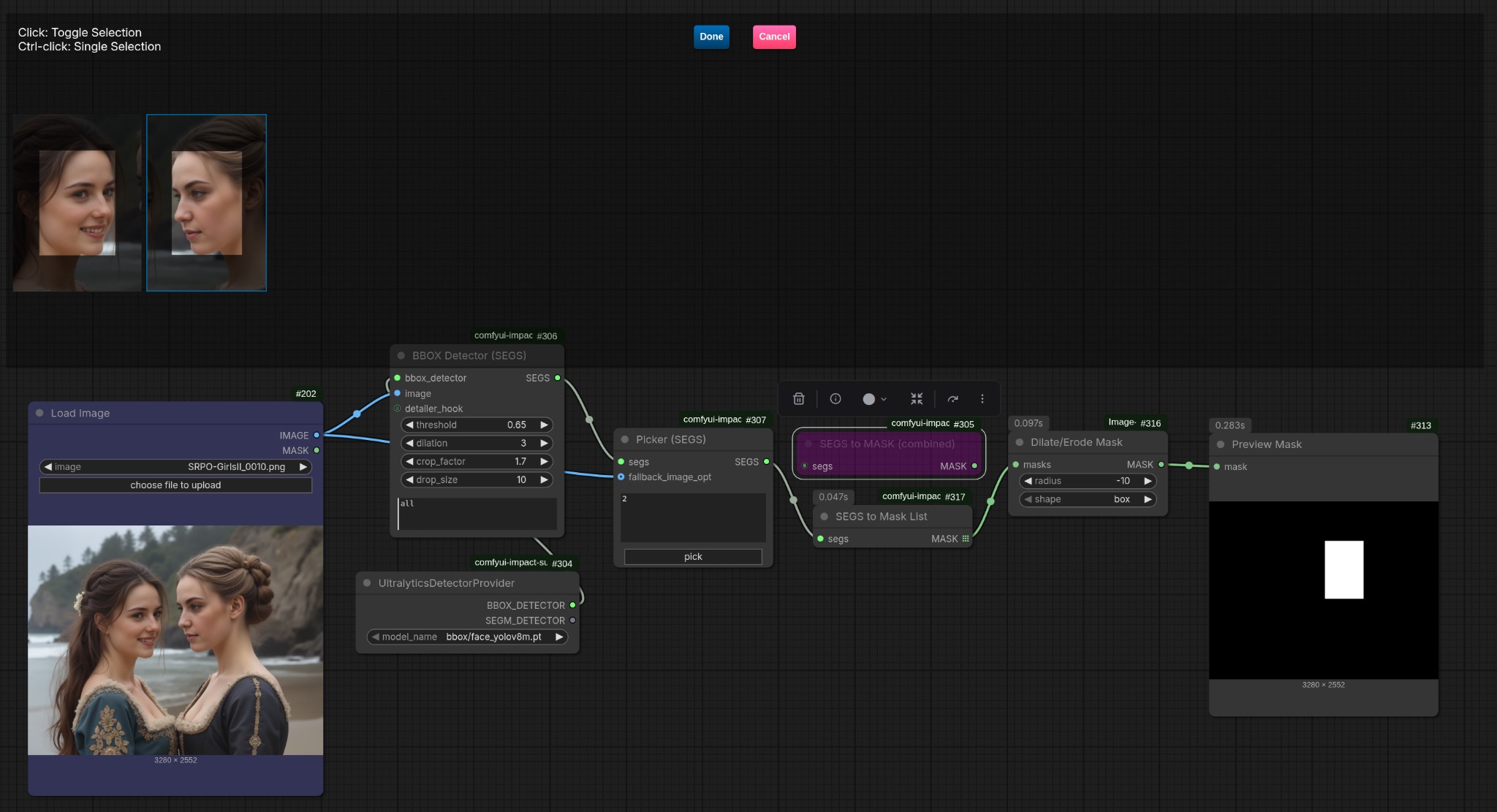

Picker (SEGS)¶

[Q]

Picker (SEGS) has two inputs: segs and fallback_image_opt. Underneath is what looks like an input box or text box with the word "picks" in it. Underneath that, a button labelled 'pick'. Outputs: SEGS. When I click the 'pick' button, a semi-transparent rectangle appears sort-of over the nodes. At the top of the rectangle are two buttons: Done and Cancel. In the left upper-hand corner are two lines of text:

Click: Toggle Selection

Ctrl-Click: Single_Selection

When I queue this, nothing appears in the semi-transparent rectangle to select. And, the output image is empty.

[Emily]

Got it — you’re this close. What you’re seeing is exactly what happens when Picker (SEGS) doesn’t have anything to display yet, or it’s waiting for an image context to “pick on.” The result is:

- the picker overlay appears ✅

- but there’s nothing selectable ❌

- and downstream you get an empty mask/image ❌

Here are the two big fixes (and then a quick “diagnose in 30 seconds” routine). 🧷

1) Picker needs an image context: plug fallback_image_opt¶

Even though Picker’s main input is segs, it often needs the image too for the UI overlay/picking.

✅ Connect the same image you fed into BBOX Detector into:

Picker (SEGS): fallback_image_opt

So you have:

- Image → BBOX Detector (SEGS): image

- same Image → Picker (SEGS): fallback_image_opt

- BBOX Detector (SEGS): SEGS → Picker (SEGS): segs

This alone fixes the “blank picker window” in a lot of setups.

2) You must run detection first, then pick, then run again¶

This Picker UI usually doesn’t populate until SEGS exist (i.e., after one queue has run and produced detections).

Do it in this order:

Step A — First queue (generate detections)¶

- Don’t click Pick yet.

- Queue once so the BBOX Detector actually outputs SEGS.

Step B — Click Pick¶

- Now click Pick.

- The picker overlay opens.

Step C — Select on the IMAGE (not on the node canvas)¶

The “Click / Ctrl-Click” instructions are telling you to click on the image preview (viewer panel), not on the nodes area.

- Click a detection (face/box region) in the preview to toggle selection

- Ctrl-click to select only one

Then hit Done.

Step D — Queue again¶

Now the picker has a chosen SEGS, and the rest of the pipeline should work.

3) Quick diagnostic: confirm you actually have detections¶

If your threshold is now 0.6–0.7 and the face is small/soft/angled, Ultralytics might be returning zero faces. If there are no SEGS, Picker has nothing to show.

Fast test:

- Temporarily bypass Picker: BBOX Detector (SEGS): SEGS → SEGS to MASK

- Set SEGS to MASK to Combined (just for this test)

- Preview that mask.

If the combined mask is blank, you have no detections.

If it’s blank, change one thing:¶

- Drop threshold back to 0.35–0.45 (just to confirm detections exist)

Once you can see faces again in the combined mask, then you can raise threshold gradually.
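Here is a small sketch of why a high threshold can silently produce zero detections. The confidence scores below are invented for illustration, and the `>=` comparison is an assumption about how a detector applies its threshold, not something taken from the Ultralytics or Impact Pack source.

```python
from typing import List, NamedTuple, Optional, Sequence


class Detection(NamedTuple):
    label: str
    confidence: float


def filter_by_threshold(dets: Sequence[Detection], threshold: float) -> List[Detection]:
    """Keep only detections at or above the confidence threshold (assumed rule)."""
    return [d for d in dets if d.confidence >= threshold]


def highest_working_threshold(
    dets: Sequence[Detection], expected: int, candidates: Sequence[float]
) -> Optional[float]:
    """Return the highest candidate threshold that still keeps `expected` detections."""
    for t in sorted(candidates, reverse=True):
        if len(filter_by_threshold(dets, t)) >= expected:
            return t
    return None


# Made-up scores for a busy two-person scene with small, angled faces.
raw = [
    Detection("seated face", 0.52),
    Detection("standing face", 0.41),
    Detection("background blob", 0.18),
]

for t in (0.65, 0.40, 0.15):
    kept = filter_by_threshold(raw, t)
    print(f"threshold {t:.2f}: {len(kept)} kept -> {[d.label for d in kept]}")

print("highest threshold keeping both faces:",
      highest_working_threshold(raw, expected=2, candidates=[0.7, 0.6, 0.5, 0.4, 0.15]))
```

With these invented scores, 0.65 keeps nothing (a black combined mask), 0.40 keeps both faces, and 0.15 also lets a false positive through. That is why the advice is to drop low to confirm detections exist, then raise the threshold gradually.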

4) Why your output image went empty¶

Downstream, SAM2 / mask conversion likely got an empty mask, so your SetLatentNoiseMask / compositing ended up with “nothing to apply,” which can yield blank previews depending on which node you’re viewing.

That’s consistent with: “Picker selects nothing → mask becomes empty → output looks empty.”

Your “do this now” checklist ✅¶

- Connect fallback_image_opt to the same image as the detector.

- Queue once before using Pick.

- Click Pick, then click in the image preview, not the node canvas.

- If still empty: lower threshold to ~0.4 and verify Combined mask shows detections.

If you can tell me one tiny thing: when you queue the detector (without picker), does SEGS to MASK (Combined) show any face regions?

- If yes: picker wiring/sequence is the issue (easy fix).

- If no: it’s a detection threshold/model issue (also easy fix).

[Q]

What I've done is:

1) bypassed Picker, so, like I had it before BBox -> SEGS to Mask ->

2) Sam2Segmentation -> GrowMask -> Convert Mask 2 Image -> Preview

Which is black: nothing detected.

So, I tried sam2_hiera_base... detected all sorts of stuff, not just face. Went back to _small... nothing detected.

[Emily]

Aha — the key discovery is this:

If BBOX Detector → SEGS to MASK is black, then nothing downstream (SAM2 / Grow / preview) matters yet.

Because SAM2 can’t “refine” a face region that doesn’t exist. The face detector is returning zero detections.

Also: switching SAM2 models (_small vs _base) doesn’t affect what the Ultralytics BBOX detector finds. Those are completely separate stages. So your “_base detects all sorts of stuff” was almost certainly SAM2 doing its own thing when driven by points/bbox — not the Ultralytics face detector suddenly working.

Let’s fix the face detector first ✅

1) Make Ultralytics actually detect something (temporary “paranoid” settings)¶

On BBOX Detector (SEGS) set these just for a test:

- threshold: 0.15 (yes, low; we’re proving the pipeline)

- drop_size: 0

- dilation: 0

- crop_factor: 1.2 (not 3.0)

- leave hook empty

Then run:

Image → BBOX Detector (SEGS) → SEGS to MASK (Combined) → Preview

If that’s still black, Ultralytics face_yolov8m is not detecting faces in this image at all (model mismatch, resolution issue, or it simply doesn’t like the scene).

If it shows anything now, we can tighten threshold back up afterwards.

2) Check you’re feeding the detector the right image (this bites a LOT)¶

Make sure the detector is seeing an actual RGB image, not a latent, not a post-processed preview, not something resized in a different branch.

Best practice:

Feed the exact same image wire into:

- BBOX Detector (SEGS): image

- SEGS to MASK

- SAM2Segmentation

No extra resizes between them for now.
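To see why a resize in only one branch breaks things, here is a minimal sketch. It assumes a "scale to total pixels" step that preserves aspect ratio and targets `megapixels * 1024 * 1024` pixels; that formula and all helper names are assumptions for illustration, not the node's confirmed behaviour.

```python
import math
from typing import Tuple

BBox = Tuple[int, int, int, int]  # hypothetical (x1, y1, x2, y2) layout


def scale_to_total_pixels(width: int, height: int, megapixels: float) -> Tuple[int, int, float]:
    """Return (new_width, new_height, scale) for an aspect-preserving resize (assumed formula)."""
    total = megapixels * 1024 * 1024
    scale = math.sqrt(total / (width * height))
    return round(width * scale), round(height * scale), scale


def rescale_bbox(bbox: BBox, scale: float) -> BBox:
    """Map a bbox from the original image into the resized image's coordinates."""
    x1, y1, x2, y2 = bbox
    return (round(x1 * scale), round(y1 * scale), round(x2 * scale), round(y2 * scale))


def bbox_fits(bbox: BBox, width: int, height: int) -> bool:
    """True if the bbox lies fully inside an image of the given size."""
    x1, y1, x2, y2 = bbox
    return 0 <= x1 < x2 <= width and 0 <= y1 < y2 <= height


# Detector branch sees the original 2048x2048 image; the other branch sees a 1 MP resize.
src_w, src_h = 2048, 2048
face_box = (1200, 300, 1500, 650)  # made-up detection in original coordinates

new_w, new_h, scale = scale_to_total_pixels(src_w, src_h, megapixels=1.0)
print(f"resized branch: {new_w}x{new_h} (scale {scale})")
print("raw bbox fits resized image:", bbox_fits(face_box, new_w, new_h))
print("rescaled bbox:", rescale_bbox(face_box, scale),
      "fits:", bbox_fits(rescale_bbox(face_box, scale), new_w, new_h))
```

A box or mask computed on the original image lands in the wrong place (or off the canvas entirely) on the resized one. Either feed both nodes the same wire, or apply the same resize before detection as well.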

3) The “stop fighting it” solution: use InsightFace / FaceDetailer as the face detector¶

You said you have InsightFace and FaceDetailer installed — that’s great, because for faces they’re often more reliable than Ultralytics in awkward angles / DOF / partial occlusion.

What you want is:

- InsightFace/FaceDetailer detects the face bbox

- You convert that to a mask (rectangle is fine)

- Then SAM2 refines it (optional)

- Then SetLatentNoiseMask clamps the swap

Why this fixes your current mess¶

Ultralytics is giving you nothing. InsightFace is designed specifically for faces and tends to find them where YOLO-face models fail.

So: if Ultralytics continues to return black masks even at threshold 0.15, I’d switch the detection stage to InsightFace and keep SAM2 for refinement.

4) About which SAM2 model: keep using sam2_hiera_small¶

Once detection works, sam2_hiera_small is still my recommendation for this “face-only refinement” job:

- fast

- stable

- plenty accurate

Use base only if you’re unhappy with edge fidelity after things are working.

But again: SAM2 is not the blocker right now — detection is.

5) Quick diagnostic question (one line)¶

When you lowered threshold to 0.15: did SEGS→MASK show anything at all (even wrong stuff), or still pure black?

- If still black → ditch Ultralytics for detection; use InsightFace/FaceDetailer bbox/mask.

- If something appears → great; then we add Picker (SEGS) to select one face, and we’re back on rails.

If you tell me the exact node names you have for FaceDetailer / InsightFace detection (even just what shows in the Add Node menu when you type “face” or “insight”), I’ll give you the exact wiring to produce a single-face mask that never drifts 🧷✨

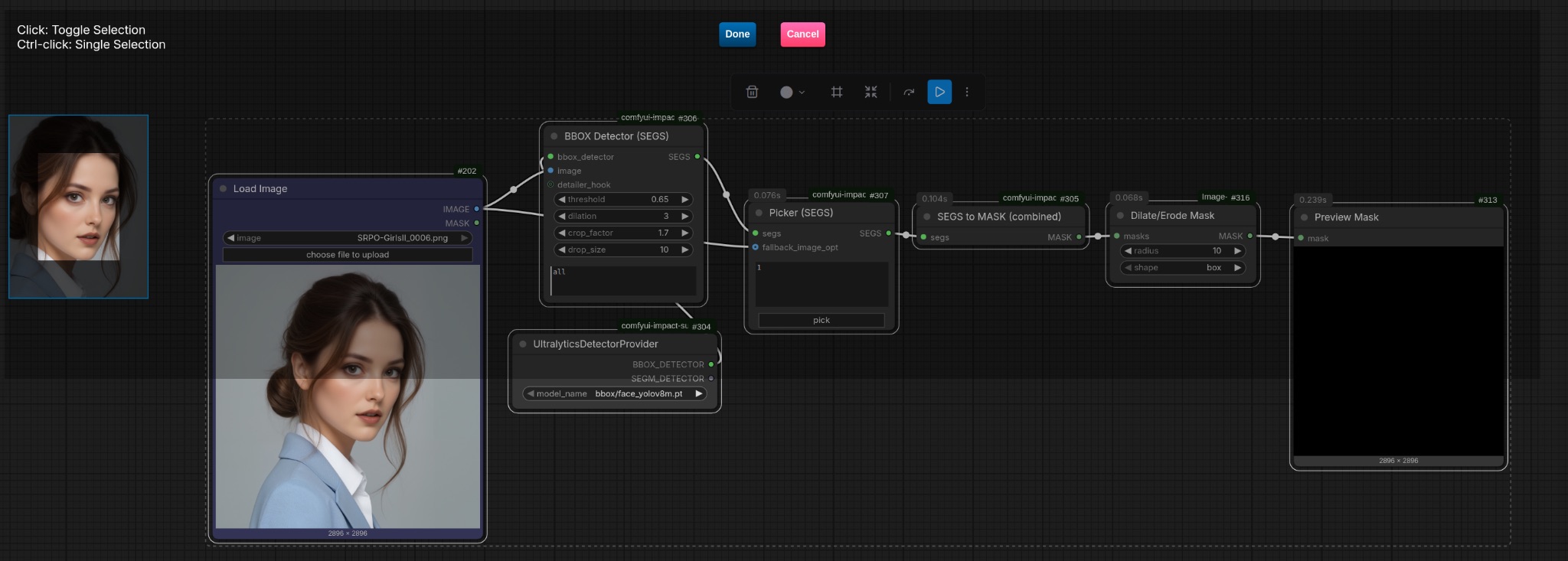

Image Wiring¶

[Q]

Yup, my mistake, Emily. For BBox_Detector, I was wired directly to LoadImage. But for Sam2Segmentation, it was LoadImage -> ImageScaleToTotalPixels -> Sam2Segmentation. Oops.

[Emily]